Category Archives: Studio One

Mixing à la Studio One

Ask 100 recording engineers about their approach to mixing, and you’ll hear 100 different answers. Here’s mine, and how this approach relates to Studio One.

The Mix Is Not a Recording’s Most Important Aspect

If it were, recordings from the past with a primitive, unpolished sound wouldn’t have endured to this day. The most important aspect is tracking; the mix provides a home for the tracks. If you capture stellar instrument and vocal sounds, the mix will almost take care of itself. Granted, you can fix sounds in the mix. But because each track interacts with the other tracks to create the mix, changing any track changes its interactions with all the other tracks. If multiple tracks require major fixes, the mix may start to fall apart as different fixes conflict with each other.

So, a great mix starts with inspired tracks. When tracking and working with MIDI, enable Retrospective Recording (Preferences or Options, then Advanced/MIDI/Enable retrospective recording). If you play some dazzling MIDI part but hadn’t pressed record, no worries—Studio One will have stored what you played. For audio, create a template that lets you track audio quickly, before inspiration dissipates. It’s helpful if your audio interface has enough inputs so that you can leave your main instruments and mics always patched in. Then, simply record-enable a track, and you’re ready to record.

Start Mixing Without Plugins—But Do Any Needed DSP Fixes

Here’s one reason why you don’t want to start by adding plugins. Sound On Sound did a series called Mix Rescue where the editors would go to a home studio and give tips on how the person working there could obtain a better mix. One time the owner offered the editors some tea, and went into the kitchen to make it. Meanwhile, the SOS folks wanted to hear what the raw tracks sounded like, so they bypassed all the plugins. When the owner came back, his first question was “What did you do to make it sound so much better?” I assume the problem was that the person doing the mix started adding plugins to enhance individual tracks, without remembering the importance of all the tracks working together.

Using DSP to alter levels can optimize tracks, without altering their character the way most plugins do. For more consistent levels, particularly with vocals, use Gain Envelopes and/or selective normalizing. (Note that you can normalize Events in the Inspector.) Also, cut spaces between phrases to delete any residual noise. Edit tracks to remove sections that you may like, but don’t advance the song’s storyline. Then, the remaining parts will have more prominence.
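To make the idea of selective normalizing concrete, here is a minimal Python sketch. It is not Studio One code: the function names, the sample lists, and the -3 dB target are assumptions chosen for illustration. Each phrase gets its own gain, so that the peak of every phrase lands on the same target level.

```python
def db_to_linear(db):
    """Convert a level in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def normalize_phrase(samples, target_peak_db=-3.0):
    """Scale one phrase so its highest absolute sample hits the target peak."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # silence: nothing to scale
    gain = db_to_linear(target_peak_db) / peak
    return [s * gain for s in samples]


# Two hypothetical vocal phrases: one quiet, one loud.
quiet_phrase = [0.05, -0.10, 0.08]
loud_phrase = [0.40, -0.80, 0.60]

for phrase in (quiet_phrase, loud_phrase):
    normalized = normalize_phrase(phrase)
    print(round(max(abs(s) for s in normalized), 3))
```

Both phrases end up with the same peak, even though they started 18 dB apart. Because the gain is constant within a phrase, the dynamics inside each phrase are preserved, which is why this kind of level fix doesn’t change a track’s character the way a compressor can.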

My one exception to “no plugins at first” is if the plugins are essential to the final sound. For example, a guitar part may require an amp sim. Or, a synth arpeggio may require a dotted eighth-note delay when it’s part of the song’s rhythm section.

Obtain the Best Possible Balance of Your Tracks

While you work on the mix without plugins, get to know the song’s feel and the global context for the tracks. As you mix, you may hear sounds you want to fix. Avoid that temptation for now—keep trying to achieve the best possible balance until you can’t improve the balance any further. Personal bias alert: The more plugins you add to a track, the more they obscure the underlying sound. Sometimes this is good, sometimes it isn’t. But when mixing with a minimalist approach, you can always make additions later. If you make additions early on, they may not make sense in the context of changes that occur as you build toward the final mix.

Here’s another personal bias alert: Avoid using any master bus plugins until you’re ready to master your mix. Although master bus plugins can put a band-aid on problems while you mix, those underlying problems remain. I believe that if you aim for the best possible mix without any master bus plugins, then when you do add master bus plugins in the Project page to enhance the sound, they’ll make a great mix outstanding.

This way of working is unlike the “top-down” mixing technique that advocates mixing with master bus processors from the start. Proponents say that this not only encourages listening to the mix as a finished product, but since you’ll add master bus processors eventually, you might as well mix with them already in place. However, most top-down mixes still undergo mastering, so bus processors then become part of mixing and mastering. If that approach works for you, great! But my best mixes have separated mixing and mastering into two distinct processes. Mixing is about creating a balance among tracks. Mastering is about enhancing that balance into a refined, cohesive whole.

EQ Tracks Strategically

By now, mixing without plugins has established the song’s character. Next, it’s time to shift your focus from the forest to the trees. Identify problem areas where the tracks don’t quite gel. Use the Pro EQ to carve out sonic spaces for the tracks (fig. 1), so they don’t conflict with each other. For example, suppose the lower mids sound muddy, even though the balance sounds correct. Solo individual tracks until you identify the one that’s contributing the most “mud.” Then, use EQ to reduce its lower midrange a bit. Or, a vocal might have to be overly loud to be intelligible. In this case, a slight upper midrange boost can increase intelligibility without needing to raise the track’s overall level.

If a static boost or cut seems heavy-handed, the Pro EQ3’s dynamic equalization function introduces EQ only when needed, based on the audio’s dynamics. For more info, see the blog post Plug-In Matrimony: Pro EQ3 Weds Dynamics.

Some engineers like using a highpass filter on tracks that don’t have low-frequency energy anyway. Traditional minimum-phase EQ can introduce phase shifts above the cutoff frequency, so use the Pro EQ’s linear-phase stage instead. Then, before adding any other effects to the track, render it to save CPU power.

Implement Needed Dynamics Control

Using EQ to help differentiate instruments means you may not need much dynamics processing. For example, after using EQ to make the vocals more intelligible, they might benefit more from light limiting than heavy compression. A little saturation on bass will give a higher average level, reduce peaks, and add harmonics. These enhancements allow the bass to stand out more without using conventional dynamics processors, or having to increase its level to where it conflicts with other instruments.

Be sparing with dynamics processing, at least initially. Mastering most pop/EDM/rock/country music involves using compression or limiting. This keeps the level in the same range as other songs that use master bus processing, and helps “glue” the tracks together. But remember, master bus processors—whether compression, EQ, maximization, or whatever—apply that processing to every track. If you’ve already done a lot of dynamics processing to individual tracks, adding more processing with mastering plugins could end up being excessive. (To be fair, this is a valid argument for top-down mixing. It’s not my preference, but it’s a technique that could work well for you.)

Studio One has the unique ability to jump between the mastering page and its associated multitrack projects. (I’m astonished that no other DAW has stolen—I mean, been inspired by—this architecture.) If after adding processors in the mastering page you decide individual tracks need changes to their amounts of dynamics processing, that’s easy to do.

Ear Candy: The Final Frontier

Now you have a clean, integrated mix that does justice to the vision you had when tracking the music. Keep an open mind about whether any little production touches could make it even better: an echo that spills over, an abrupt mute, a slight tempo change to help the song breathe (although it’s often best to apply this to the rendered stereo mix, prior to mastering), or a tweak of a track’s stereo image. These can add those extra “somethings” that make a mix even more compelling.

Mastering

Mastering deserves its own blog post, because it involves a lot more than just slamming a maximizer on the output bus. If this post gets a good response, I’ll do a follow-up on mastering.

FL Studio Meets Studio One

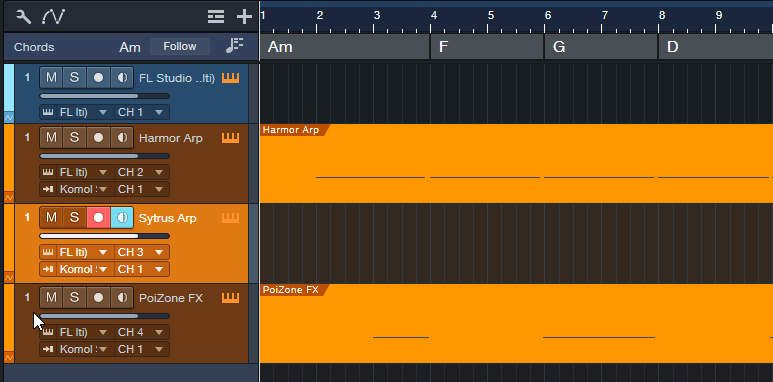

FL Studio is a cool program. Yet when some users see me working with Studio One’s features like comping tools, harmonic editing, the lyrics track, Mix FX, MPE support, and the like, I’ll often hear “wow, I wish FL Studio could do that.” Well, it can…because you can open FL Studio as a multi-output VSTi plug-in within Studio One. Even with the Artist version, you can stream up to 16 individual audio outputs from FL Studio into Studio One’s mixer, and use Studio One’s instrument tracks to control FL Studio’s instruments.

For example, to use Studio One’s comping tools, record into Studio One instead of FL Studio, do your comping, and then mix the audio along with audio from FL Studio’s tracks. With harmonic editing, record the MIDI in Studio One and create a Chord track. Then, use the harmonically edited MIDI data to drive instruments in FL Studio. And there’s something in it for Studio One users, too—this same technique allows using FL Studio like an additional rack of virtual instruments.

The following may seem complicated. But after doing it a few times, the procedure becomes second nature. If you have any questions about this technique, please ask them in the Comments section below.

How to Set Up Studio One for FL Studio Audio Streams

1. Go to Studio One’s Browser, and choose the Instruments tab.

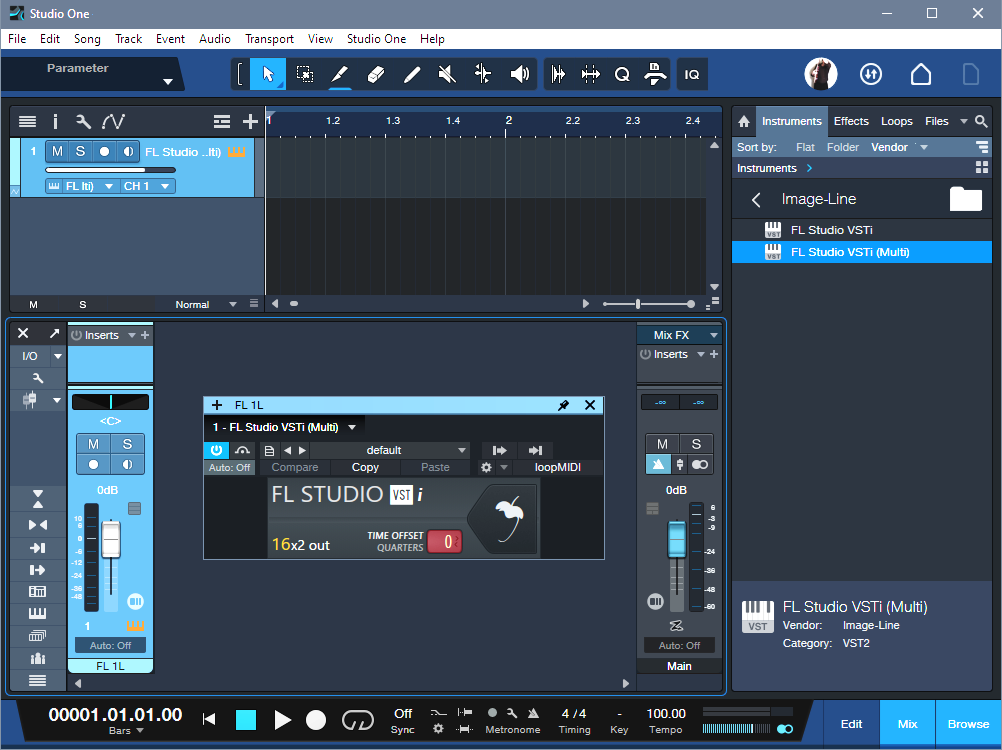

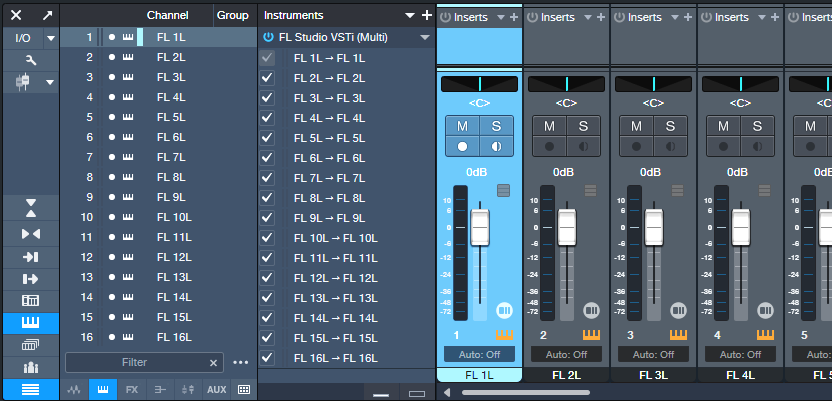

2. Open the Image-Line folder. Drag FL Studio VSTi (Multi) into the Arrange view, like any other virtual instrument. This creates an Instrument track in Studio One, and opens the FL Studio widget (fig. 1).

3. Refer to fig. 2 for the following steps. Click on Studio One’s Keyboard icon toward the left of the Mixer view to open the Instrument rack. You’ll see FL Studio VSTi (Multi).

4. Click the downward arrow to the right of the FL Studio VSTi (Multi) label, and choose Expand. This exposes the 16 FL Studio outputs.

5. Check the box to the left of each output you want to use. Checking a box opens a new mixer channel in Studio One.

6. To show/hide mixer channels, click the Channel List icon (four horizontal bars, at the bottom of the column with the Keyboard icon). This opens a list of mixer channels. The dots toggle whether a channel is visible (white dot) or hidden (gray dot).

Assign FL Studio Audio Streams to Studio One

To open the FL Studio interface, click on the Widget’s fruit symbol. Then (fig. 3):

1. In the Mixer, click on the channel with audio you want to stream into Studio One.

2. Click on the Audio Output Target selector in the Mixer’s lower right.

3. From the pop-up menu, select the Studio One mixer channel that will stream the audio.

Note: FL 1 carries a two-channel mixdown of all FL Studio tracks. This is necessary for DAWs that don’t support multi-output VST instruments. To play back tracks only through their individual Studio One channels, turn down Studio One’s FL 1L fader. For similar reasons, if you later want to record individual instrument audio outputs into Studio One, the process is simpler if you avoid using channel FL 1 for streaming audio.

Drive FL Studio Instruments from Studio One MIDI Tracks

This feature is particularly powerful because of Studio One’s harmonic editing. Record your MIDI tracks in Studio One, and use the harmonically edited data to drive FL Studio’s instruments. Then, you can assign the instruments to stream their audio outputs into Studio One for further mixing.

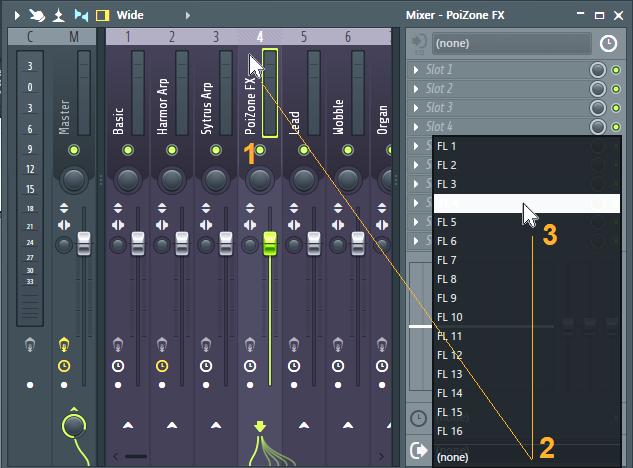

FL Studio defaults to assigning the first 16 Channel Rack positions to the first 16 MIDI channels. These positions aren’t necessarily numbered 1-16, because the Channel Rack positions can “connect” to mixer channels with different numbers. So, insert the instruments you want to use with Studio One in the first 16 Channel Rack positions. Referring to fig. 4:

1. After the FL Studio VSTi has been inserted in Studio One, insert an additional Studio One Instrument track for each FL Studio instrument you want to drive.

2. Set Studio One’s Instrument track output channel to match the desired FL Instrument in the Channel Rack.

3. Any enabled Input Monitor in Studio One will feed MIDI data to its corresponding Rack Channel in FL Studio. To send data to only one Rack Channel, select it in FL Studio. In Studio One, enable the Input Monitor for only the corresponding Rack Channel.

4. Record-enable the Studio One track into which you want to record.

Now you can record MIDI tracks in Studio One, use Harmonic Editing to experiment with different chord progressions, and hear the FL Studio instruments play back through Studio One’s mixer.

Recording the FL Instruments as Audio Tracks in Studio One

Studio One’s Instrument channels aren’t designed for recording. Nor can you render an FL Studio-driven Instrument track to audio, because the instrument isn’t in the same program as the track. So, to record an FL Studio instrument:

1. Add an Audio track in Studio One.

2. Click the Audio track’s Input field. This is the second field above the channel’s pan slider.

3. A pop-up menu shows the available inputs. Unfold Instruments, and choose the instrument you want to record. (Naming Studio One’s Instrument tracks makes choosing the right instrument easier, because you don’t need to remember which instrument is, for example, “FL 5L.”)

4. Record-enable the Audio track, and start recording the track in real time.

About Transport Sync

FL Studio mirrors whatever you do with Studio One’s transport, and follows Studio One’s tempo. This includes Tempo Track changes. You can jump around to different parts of a song, and FL Studio will follow.

Unlike ReWire, though, the reverse is not true. FL Studio’s transport operates independently of Studio One. If you click FL Studio’s Play button, only FL Studio will start playing. This can be an advantage if you want to edit FL Studio without hearing what’s happening in Studio One.

I must admit, ReWire being deprecated was disappointing. I liked being able to use two different programs simultaneously to take advantage of what each one did best. Well, ReWire may be gone—but FL Studio and Studio One get along just fine.

Make Bass “Pop” in Your Mix

Bass has a tough gig. Speakers have a hard time reproducing such low frequencies. Also, the ear is less sensitive to low (and high) frequencies compared to midrange frequencies. Making bass “pop” in a mix, especially at low playback levels, isn’t easy.

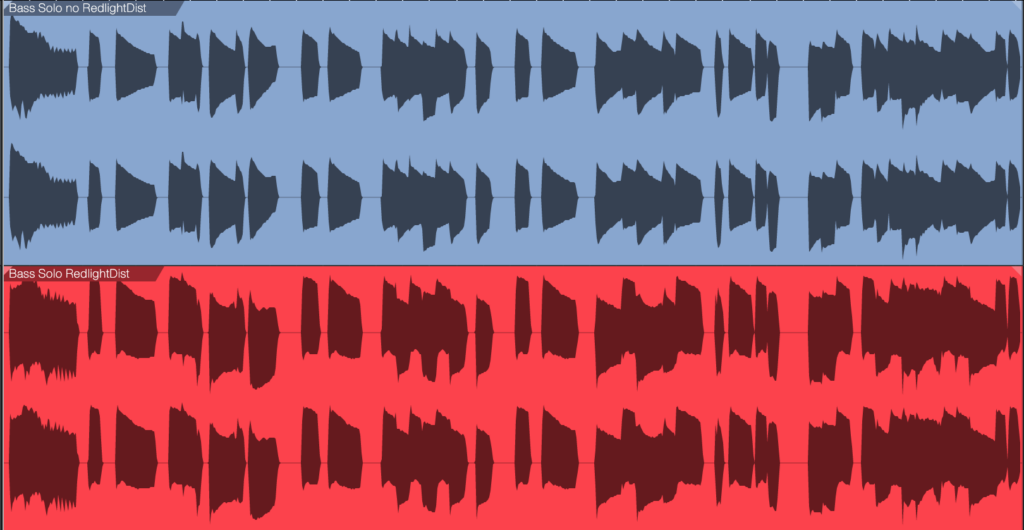

Fortunately, saturating the bass can provide a solution. This type of distortion has two beneficial effects:

- Adds high-frequency harmonics. A bass note with harmonics is easier to hear, even in systems with a compromised bass response and at lower playback levels.

- Raises the bass’s average level. With light saturation, the bass has a higher average level. But this doesn’t have to increase the peak level (fig. 1).
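The second point can be checked with a few lines of Python. This is only a sketch: it uses a generic tanh soft clipper as a stand-in for saturation (an assumption, not the algorithm inside any particular plug-in), and the `drive` value of 3.0 is arbitrary. The output is rescaled so that a full-scale input still peaks at 1.0.

```python
import math


def saturate(sample, drive):
    """Soft-clip a sample with tanh, rescaled so full scale stays at 1.0."""
    return math.tanh(drive * sample) / math.tanh(drive)


def peak(samples):
    """Highest absolute sample value."""
    return max(abs(s) for s in samples)


def rms(samples):
    """Root-mean-square level, a simple measure of average level."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


# One cycle of a full-scale sine wave stands in for a bass note.
N = 1000
clean = [math.sin(2 * math.pi * n / N) for n in range(N)]
saturated = [saturate(s, drive=3.0) for s in clean]

print("clean:     peak %.3f  rms %.3f" % (peak(clean), rms(clean)))
print("saturated: peak %.3f  rms %.3f" % (peak(saturated), rms(saturated)))
```

Both signals peak at the same value, but the saturated one has a higher RMS level because the waveform spends more time near its peaks. The flattened wave shape is also what generates the added harmonics mentioned in the first point.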

Most bass lines use single notes. So, unlike guitar, saturation doesn’t create nasty intermodulation distortion due to notes interacting with each other. Even with saturation, the bass sounds “clean” in the context of a mix.

The Star of the Show: RedlightDist

Although tape emulation effects are popular for saturating bass, RedlightDist (fig. 2) is all you need. Setting Type to Hard Tube and using a single Stage produces an effect with bass that’s almost indistinguishable from tape emulation plugins. (The Bass QuickStrip tip also includes the RedlightDist, but the preset uses the Splitter. This simpler tip works with Studio One Artist or Professional.)

How to Optimize the Input Level

The saturation amount depends on the input level, not just the settings of the In, Distortion, and Drive controls. The Distortion and Drive settings in fig. 2 work well. At least initially, use the In control to adjust the amount of saturation. If the highest setting doesn’t produce enough saturation, increase the level going into the RedlightDist. If you still want more saturation, increase Drive.

Hearing is Believing!

Make sure you check out these audio examples, because the way RedlightDist affects the overall mix is dramatic. First, listen to the unprocessed bass sound as a reference. All the examples are normalized to ‑6 dB peak levels.

The next example is the saturated sound. But the real payoff is in the final two examples.

The following example plays an excerpt from a song. The bass is not saturated. Listen to it in context with the mix.

The final example uses saturated bass in the song excerpt. Listen to how the bass stands out in the mix, even though its peak level is the same as the previous example.

By using saturation, you can mix the bass lower than you could without saturation, yet the bass sounds equally prominent. This offers two main benefits:

- There’s more low-frequency space for the kick and other instruments.

- Having less low-bass energy frees up more headroom. So, the entire mix can have a higher level, without needing to add compression or limiting.

RedlightDist is a versatile effect. Also try this technique with kick—as well as analog, beatbox-style drum sounds—when you need more punch and pop.

Enhance Your Reverb’s Image

First, an announcement: If you own the eBook “How to Record and Mix Great Vocals in Studio One,” you can download the 2.1 update for free from your PreSonus account. This new version, with 10 chapters and over 200 pages, includes the latest features in Studio One 6. New customers can purchase the eBook from the PreSonus Shop. And now, this week’s tip…

Studio One’s Room Reverb creates realistic ambiance, but sometimes “realistic” isn’t the goal. Widening the reverb’s stereo image outward can give more clarity to sounds panned to center, such as kick, snare, and vocals. Expanding the image also gives a greater sense of width.

This tip covers four ways to widen the reverb’s image. All these techniques insert reverb in an FX Channel. The channels you want to process with reverb feed the FX Channel via Send controls.

#1 Easiest Option: Binaural Pan

Version 6 upgraded the mixer’s panpot to do dual panning or binaural panning, in addition to the traditional balance control function. Click on the panpot, select Binaural from the drop-down menu, and turn up the Width control to widen the stereo image (fig. 1).

If you haven’t upgraded to version 6 yet, then insert the Binaural Pan plug-in after the reverb. Turn up the Binaural Pan’s Width parameter for the same widening effect.

#2 Most Flexible: Mid-Side Processing

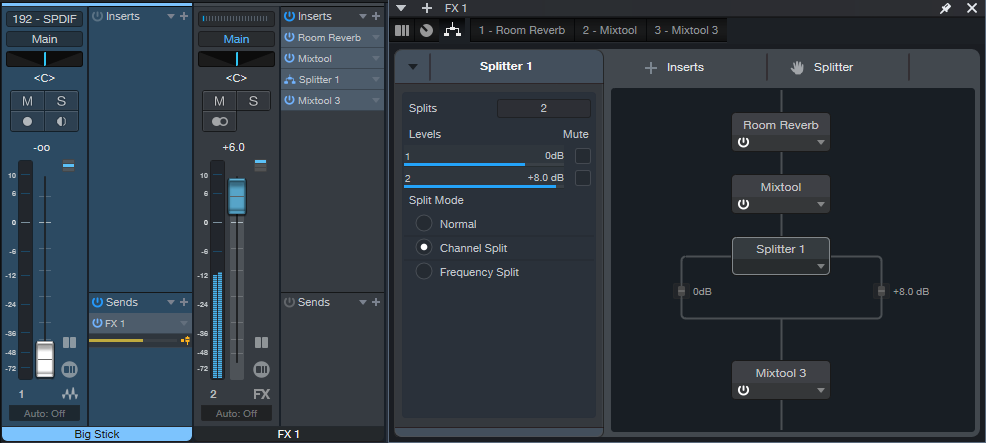

Mid-side processing separates the mid (center) and side (left and right) audio. (For more about mid-side processing, see Mid-Side Processing Made Easy and Ultra-Easy Mid-Side Processing with Artist.) The advantage compared to the Binaural Pan is that you can process the sides or center audio, as well as adjust their levels. This tip uses the Splitter module in Studio One Professional.

In fig. 2, the Room Reverb feeds a Mixtool. Enabling the Mixtool’s MS Transform function encodes stereo audio so that the mid audio appears on the left channel, and the sides audio on the right. The Splitter is in Channel Split mode, so its Level parameters set the levels for the mid audio (Level 1) and sides audio (Level 2). To widen the stereo effect, set Level 2 higher than Level 1. The final Mixtool, also with MS Transform enabled, decodes the signal back into conventional stereo.
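For readers who like to see the arithmetic, here is a minimal Python sketch of the encode, scale, and decode chain described above. The function names are hypothetical, and the divide-by-two scaling is one common mid-side convention; the exact scaling used inside the Mixtool is an assumption here.

```python
def ms_encode(left, right):
    """Return (mid, side) for one stereo sample pair."""
    return (left + right) / 2, (left - right) / 2


def ms_decode(mid, side):
    """Return (left, right) from a mid/side pair."""
    return mid + side, mid - side


def widen(left, right, mid_gain=1.0, side_gain=1.5):
    """Encode to mid/side, scale each part, and decode back to stereo."""
    mid, side = ms_encode(left, right)
    return ms_decode(mid * mid_gain, side * side_gain)


# A centered sound (identical in both channels) has no side content,
# so raising the side gain leaves it untouched.
print(widen(0.5, 0.5))

# A sound that differs between channels gets a larger left/right difference.
print(widen(0.6, 0.2))
```

In this sketch, `mid_gain` and `side_gain` play the roles of the Splitter’s Level 1 and Level 2. With both gains at 1.0, decoding returns the original left and right values, which is why the processing is transparent until you change a level.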

#3 Most Natural-Sounding: Dual Reverbs and Pre-Delay

This setup requires two FX Channels, each with a reverb inserted (fig. 3).

To create the widening effect:

1. Edit one reverb for the sound you want.

2. Pan its associated FX Channel full left.

3. Copy the reverb into the other channel, and pan its associated FX Channel full right.

4. To widen the stereo image, increase or decrease the pre-delay for one of the reverbs. The sound will be similar to the conventional reverb sound, but with a somewhat wider, yet natural-sounding, stereo image.

Generally, copying audio to two channels and delaying one of them to create a pseudo-doubling effect can be problematic. This is because collapsing a mix to mono thins the sound due to phase cancellation issues. However, the audio generated by reverb is diffuse enough that collapsing to mono doesn’t affect the sound quality much (if at all).
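The cancellation problem with delayed copies is easy to quantify for a steady sine tone. Summing a tone with a copy delayed by `d` seconds gives a level of 2·|cos(π·f·d)| relative to one copy. The short Python sketch below evaluates that formula; the 10 ms delay and the test frequencies are hypothetical values picked to make the pattern obvious.

```python
import math


def mono_sum_gain(freq_hz, delay_s):
    """Level of a sine tone after summing it with a delayed copy.

    2.0 means the copies reinforce fully; 0.0 means they cancel.
    """
    return abs(2 * math.cos(math.pi * freq_hz * delay_s))


delay = 0.010  # hypothetical 10 ms offset between the two channels
for freq in (50, 100, 150, 200):
    print(freq, "Hz ->", round(mono_sum_gain(freq, delay), 3))
```

The result alternates between full cancellation and full reinforcement as frequency rises, which is the comb-filter effect that thins a doubled part in mono. A reverb tail has no single steady pitch or fixed phase relationship for the comb filter to act on, which fits the observation that the dual-reverb setup survives a mono collapse.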

#4 Most Dramatic: Dual Reverbs with Different Algorithms

This provides a different and dramatic sense of width. Use the same setup as the previous tip, but don’t increase the pre-delay on one of the reverbs. Instead, change the Type algorithm (fig. 4).

If you change one of the reverbs to a smaller room size, you’ll probably need to increase the Size and Length to provide a balance with the reverb that has a bigger room size. Conversely, if you change the algorithm to a larger room, decrease Size and Length. You may also need to vary the FX Channel levels if the stereo image tilts to one side.

Free! Three Primo Piano Presets

Let’s transform your acoustic piano instrument sounds—with effects that showcase the power of Multiband Dynamics. Choose from two download links at the end of this post:

- FX Chains. These include the FX part of the Instrument+FX Presets (see next), so you can use them with any acoustic piano virtual instrument. You may want to bypass any effects added to the instrument (if present) so that the FX Chains have their full intended effect. Then, you can try adding the effects back in to see if they improve the sound further.

- Instrument+FX Presets. These are based on the PreSonus Studio Grand piano SoundSet. This SoundSet comes with a Sphere membership, or is optional-at-extra-cost for Studio One Artist or Professional.

Remember, presets that incorporate dynamics will sound as intended only with suitable input levels. These presets are designed for relatively high input levels, short of distortion. If the presets don’t seem to have any effect, increase the piano’s output level. Or, add a Mixtool between the channel input and first effect to increase the level feeding the chain.

CA Studio Grand Beautiful Concert

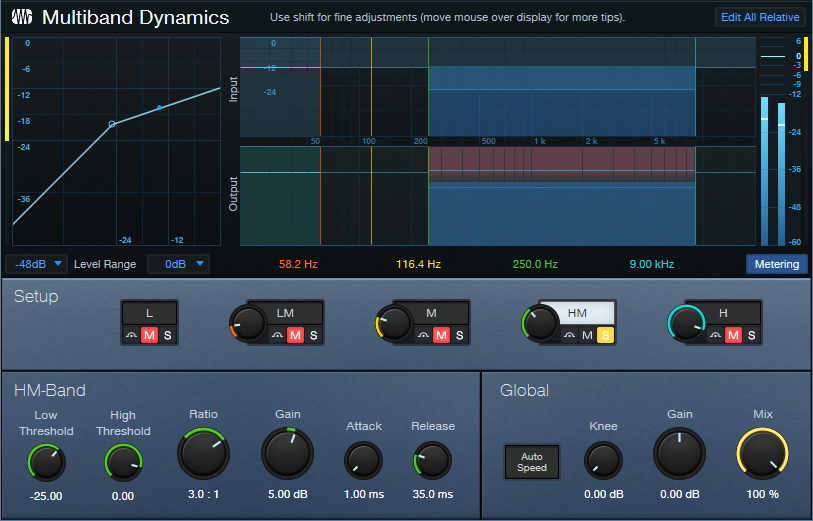

Before heading in too different a direction, let’s create a gorgeous solo piano sound. In this preset, the Multiband Dynamics has two functions. The Low band compresses frequencies below 100 Hz (fig. 1), to increase the low end’s power.

The HM stage is active, but doesn’t compress. It acts solely as EQ. By providing an 8 dB boost from 2.75 kHz to 8 kHz, this stage adds definition and “air.” The remaining effects include Room Reverb for ambiance and Binaural Pan to widen the instrument’s stereo image. Let’s listen to some Debussy.

CA Studio Grand Funky

Although not intended as an emulation, this preset is inspired by the punchy, funky tone of the Wurlitzer electric pianos produced from the 50s to the 80s. The Multiband Dynamics processor solos, and then compresses, the frequency range from 250 Hz to 9 kHz (fig. 2).

The post-instrument effects include a Pro EQ3 to impart some “honk” around 1.6 kHz, and the RedlightDist for mild saturation. X-Trem is optional for adding vintage tremolo FX (the audio example includes X-Trem).

CA Studio Grand Smooth

This smooth, thick piano sound is ideal when you want the piano to be present, but not overbearing. The Multiband Dynamics has only one function: provide fluid compression from 320 Hz to 3.50 kHz (fig. 3). This covers the piano’s “meat,” while leaving the high and low frequencies alone.

The audio example highlights how the compression increases sustain on held notes.

Hey—Up for More?

These presets just scratch the surface of how multiband dynamics and other processors can transform instrument sounds. Would you like more free instrument presets in the future? Let me know in the comments section below.

Download the Instrument+FX Presets here:

Download the FX Chains here:

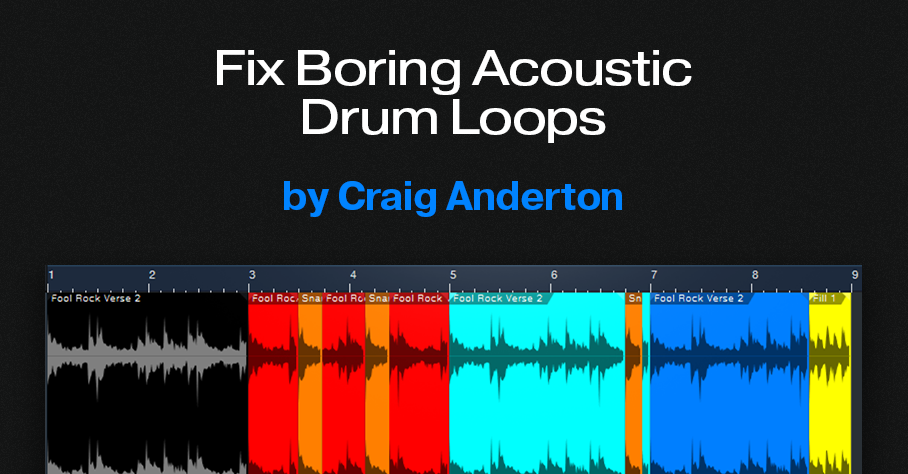

Fix Boring Acoustic Drum Loops

Acoustic drum loops freeze-dry a drummer’s playing—so, what you hear is what you get. What you get. What you get. What you get. What you get. What you get.

Acoustic drums played by humans are inherently expressive:

- Human drummers don’t play the same hits exactly the same way twice.

- Drummers play with subtlety. Not all the time, of course. But ghost notes, dynamic extremes, and slight timing shifts are all in the drummer’s toolkit.

Repetitive loops cue listeners that a drum part has been constructed, not played. So, let’s explore techniques that make acoustic drum loops livelier and more credible. (We’ll cover tips for MIDI drums in a future post.)

Loop Rearrangement

Simply shuffling a few hits within a loop may be all that’s needed to add interest. The following audio examples use a looped verse, and a fill, from Studio One’s Sound Sets > Acoustic Drum Kits and Loops > Fool Rock > Verse 132 BPM.

The first clip plays the Fool Rock Verse 2 loop four times (8 measures), without changes. It quickly wears out its welcome.

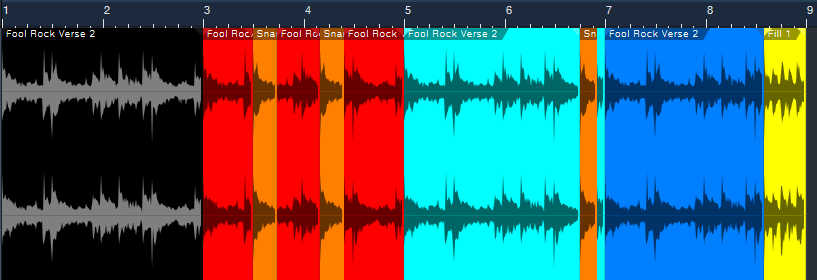

We can improve the loop simply by shuffling one snare hit, and adding part of a fill (fig. 1).

The black loop at the beginning is the original two-measure loop. It repeats three more times, although there are some changes. The red loop is the same, except that a copy of its first snare hit (colored orange) replaces a kick hit later in the loop.

The light blue loop uses the same orange snare hit to provide an accent just before the loop’s end. The copied snare sounds different from the snare that preceded it, which also increases realism.

The dark blue loop is the same as the original, except that it adds the last half of the file Fool Rock Fill 1 at the end.

Listen to how even these minor changes enhance the drum part.

Cymbal Protocol

The Fool Rock folder has two different versions of the Verse 2 loop. One has a crash at the beginning, one doesn’t. I’m not a fan of loops with a cymbal on the first beat. With loops that are only two measures long, repeating the loop with the cymbal results in too many cymbals for my taste. Besides, drummers don’t use cymbals just to mark the downbeat, but to add off-beat hits and accents.

The loops in fig. 1 use the version without a crash. If we replace the first loop with the version that has a crash, the cymbal doesn’t become annoying because it’s followed by three crashless loops. But it’s even better if you overdub cymbals to add accents.

The final audio clip’s first two measures use the Fool Rock loop that includes a crash on the downbeat. However, there are now two cymbal tracks in addition to the loop. A hit from each cymbal creates a transition between the end of the second loop, and the beginning of the third loop.

Loop Ebb and Flow

In this kind of 8-measure figure with 2-measure loops, it’s common for the first and third loops to be similar or even identical. The second loop will add some variations, and the fourth loop will have a major variation at the end to “wrap up” the figure. This natural ebb and flow mimics how many drummers create parts that “breathe.” Keep this in mind as you strive for more vibrant, life-like drum parts.

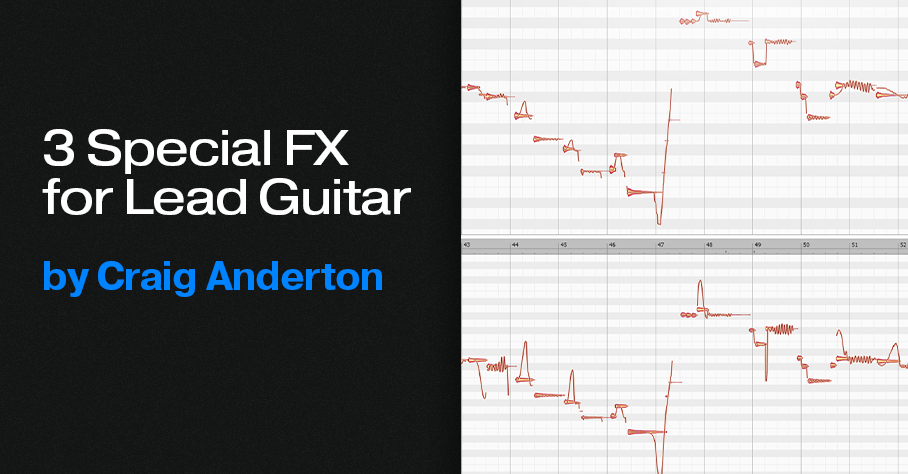

3 Special FX for Lead Guitar

Melodyne can do much more than vocal pitch correction. Previous tips have covered how to do envelope-controlled flanging and polyphonic guitar-to-MIDI conversion with Melodyne Essential. The following techniques add mind-bending effects to lead guitar, and don’t involve pitch correction per se.

However, all three tips require the Pitch Modulation and Pitch Drift tools. These tools are available only in versions above Essential (Assistant, Editor, and Studio). A Melodyne 5 trial version incorporates these versions. The trial period is 30 days, during which there are no operational limitations.

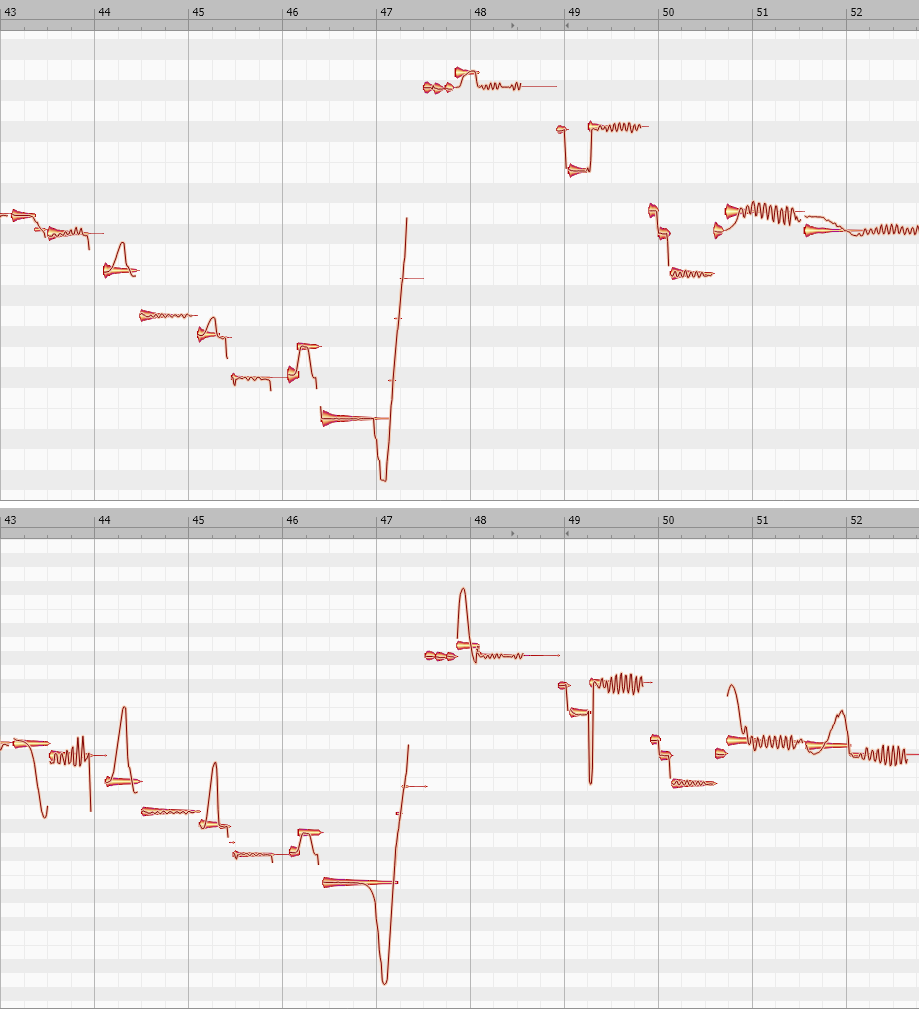

Fig. 1 shows a lead guitar line, before and after processing, that incorporates all three processing techniques. This is the melody line used in the before-and-after audio example.

Vibrato Emphasis/De-Emphasis

To increase or decrease the vibrato amount, use Melodyne’s Pitch Modulation tool. In fig. 1, the increased vibrato effect is most visually obvious at the end of measures 43, 49, and 52. To change the amount of vibrato, click on the blob with the Pitch Modulation tool. While holding the mouse button down, drag up (more vibrato) or down (less vibrato). Extreme vibrato amounts can sound like whammy bar-based vibrato.
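Conceptually, changing the vibrato amount means scaling how far the pitch wanders from the note’s center. The Python sketch below illustrates that idea only. It is not how Melodyne works internally, and the function name, the `amount` parameter, and the pitch trace in cents are all assumptions made for illustration.

```python
def scale_vibrato(pitch_curve, amount):
    """Scale how far each pitch value deviates from the note's average pitch.

    amount = 1.0 leaves the curve unchanged, 0.0 flattens it,
    and values above 1.0 exaggerate the vibrato.
    """
    center = sum(pitch_curve) / len(pitch_curve)
    return [center + (p - center) * amount for p in pitch_curve]


# Hypothetical pitch trace in cents relative to the target note.
note = [0, 20, 0, -20, 0, 20, 0, -20]

print(scale_vibrato(note, 2.0))  # deeper vibrato
print(scale_vibrato(note, 0.0))  # vibrato removed
```

Dragging up with the tool corresponds to an `amount` above 1.0 in this model, and dragging down corresponds to an `amount` below 1.0. The average pitch stays where it was, so the note still sounds in tune.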

Synthesized Slides

Notes with moderate bending can bend pitch up or down over as much as several semitones:

1. Select the Pitch Modulation tool.

2. Click on a blob that incorporates a moderate bend.

3. While holding the mouse button down, drag up to increase the bend up range. With most notes, you can invert the bend by dragging down.

Because a synthesized bend can cover a wider pitch range than physical strings, this effect sounds like you’re using a slide on your finger. In fig. 1, see measures 44 and 45 for examples of upward bends. The end of measure 47 shows an increased downward bend.

For the most predictable results, the note you want to bend should:

- Establish its pitch before you start bending. If you start a note with a bend, Melodyne may think the bent pitch is the correct one. This complicates increasing the amount of bend.

- Have silence (however brief) before the note starts. If there’s no silence, before opening the track in Melodyne, edit the guitar track to create a short silent space before the note.

Slide Up to Pitch, or Slide Down to Pitch

This is an unpredictable technique, but when it works, note transitions acquire a “smooth” character. In fig. 1, note the difference between the modified and unmodified pitch slides in measures 43, 44, 47, 49, 50, and 51.

To add this kind of slide, click on a blob with the Pitch Drift tool, and then drag up or down. The slide’s character depends on what happens during, before, and after the note. Sometimes using Pitch Modulation to initiate a slide, and Pitch Drift to modify the slide further, works best. Sometimes the reverse is true.

This is a trial-and-error process. With experience, you’ll be able to recognize which blobs are good candidates for slides.

The “Hearing is Believing” Audio Examples

Guitar Solo.mp3 is an isolated guitar solo that uses none of these techniques.

Guitar Solo with Melodyne.mp3 uses all of these techniques on various notes.

To hear these techniques in a musical context that shows how they can add a surprising amount of emotion to a track, this link takes you to a guitar solo in one of my songs on YouTube. The solo uses all three effects.

MIDI-Accelerated Delay Effects

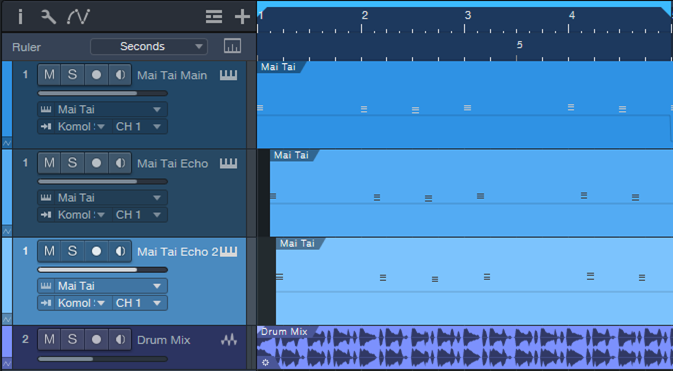

Synchronized echo effects, particularly dotted eighth-note delays (i.e., intervals of three 16th notes), are common in EDM and dance music productions. The following audio example applies this type of Analog Delay effect to Mai Tai.
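If a delay doesn’t offer tempo sync, or you simply want to know the actual time involved, the conversion from tempo to delay time is simple arithmetic. Here is a small Python sketch; the function and constant names are hypothetical, and it assumes the tempo is counted in quarter-note beats.

```python
def note_delay_ms(bpm, beats):
    """Length in milliseconds of a note value given in quarter-note beats."""
    return 60000.0 / bpm * beats


EIGHTH = 0.5          # an eighth note is half a beat
DOTTED_EIGHTH = 0.75  # three 16th notes

for bpm in (100, 120, 128):
    print(bpm, "BPM:", note_delay_ms(bpm, DOTTED_EIGHTH), "ms")
```

At 120 BPM, a quarter note lasts 500 ms, so a dotted eighth-note delay works out to 375 ms and a plain eighth-note delay to 250 ms.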

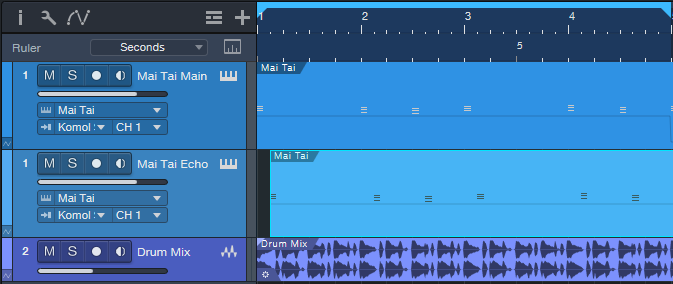

However, you can also create echoes for virtual instruments by copying, offsetting, and editing an instrument track’s MIDI note data. In the next audio example, the same MIDI track has been copied and delayed by an 8th note (fig. 1).

In the original instrument track, the MIDI note data velocities are around 127. These values push the filter cutoff close to maximum. Reducing the copied Echo track’s velocity to about half creates notes that don’t open up the Mai Tai’s filter as much. So, the MIDI-generated delay’s timbre is different.

The next track copies the original track again. But this time, the notes are transposed up an octave, and offset by a dotted eighth note compared to the original (fig. 2). For this track, the velocities are about half the maximum velocity.

Now we have our “MIDI-accelerated” echo effect for the virtual instrument’s notes, which are still processed by the original Analog Delay effect. The final audio example highlights how the combination of analog delay and MIDI note delay evolves over 8 measures.

These audio examples are only the start of what you can do with MIDI echo by offsetting, transposing, and altering note velocities. You can even feed the different MIDI tracks into different virtual instruments, and create amazing polyrhythms. But why stop there? Hopefully, this blog post will inspire you to come up with your own signature variations on this technique.

Friday Tips in the Real World: the Sequel

This is a follow-up to the Friday Tips in the Real World blog post that appeared in 2020. It was well-received, so I figured it was time for an update.

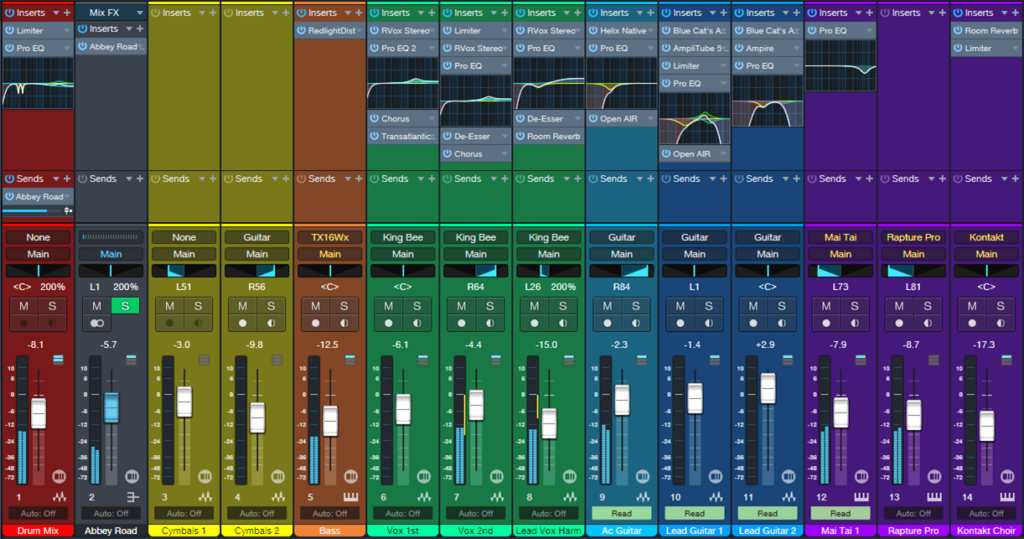

Although many of the Friday Tips include an audio example of how a tip affects the sound, that’s different from the real-world context of a musical production. So, this blog post highlights how selected tips were used in my recent music/video project, Unconstrained. The project was recorded, mixed, and mastered entirely in Studio One.

As you might expect, the workflow-related tips were used throughout, as were some of the audio tips. For example, all the vocals used the Better Vocals with Phrase-by-Phrase Normalization technique, and all the guitar parts followed the Amp Sims: Garbage In, Garbage Out tip. The Chords Track and Project Page updates were crucial to the entire project as well.

The links take you to specific parts of the songs that showcase the tips, accompanied by links to the relevant Friday Tip blog post. If you’re curious about specific production techniques used in the project, whether they’re included in this post or not, feel free to ask questions in the comments section.

One reader’s comment for the Lead Guitar Editing Hack blog post mentioned how useful this technique is. If you missed it the first time, here’s what it sounds like applied to a solo. Attenuating the attack gives the melody line a synth-like, otherworldly sound. Incidentally, if you back up the video to 6:20, the cello sounds are from Presence. I tried the “industry standard” orchestra programs, but I liked the Presence cellos more. Also, I used the “time trap” technique from the Fun with Tempo Tracks post to slow the cellos slightly before going full tilt into the solo.

The Magic Stereo blog post described a novel way to add motion to rhythm parts, like piano and guitar. This excerpt uses that technique to move the guitar in stereo, but without conventional panning. Later on in the song, the drums use Harmonic Editing to give a sense of pitch. The post Melodify Your Beats describes this technique. But in this song, the white noise wasn’t needed because the drums had enough of a frequency range so that harmonic editing worked well.

I wrote about the EDM-style “pumping” effect in the post “Pump” Your Pads and Power Chords, and it goes most of the way through this song. I chose this section because the solo uses Presence, which I think may be underrated by some people.

Another topic that’s dear to my heart is blues harmonica, and it loves distortion—as described in the blog post Blues Harmonica FX Chain. It’s wonderful how amp sims can turn the thin, reedy sound of a harmonica into something with so much power it’s almost like a brass section. However, note that this example uses a revised version of the original FX Chain, based on Ampire. (The revised version is described in The Huge Book of Studio One Tips and Tricks.)

The blog post Studio One’s Session Bass Player generated a lot of comments. But does the technique really work? Well, listen to this example and decide for yourself. I needed a scratch bass part but it ended up being so much like what I wanted that I made only a couple tweaks…done. For a guitar solo in the same song, I tried a bunch of wah pedals but the one that worked best was Ampire’s.

I still think Studio One’s ability to do polyphonic MIDI guitar courtesy of Melodyne (even the Essential version) is underrated. This “keyboard” part uses Mai Tai driven by MIDI guitar. The MIDI part was derived from the guitar track that’s doubling the Mai Tai. For more information, see the blog post Melodyne Essential = Polyphonic MIDI Guitar. Incidentally, except for the sampled bass and choir, all the keyboard sounds were from Mai Tai. If you’ve mostly been using third-party synths, spend some time re-acquainting yourself with Mai Tai and Presence. They can really deliver.

As the post Synthesize OpenAIR Reverb Impulses in Studio One showed, it’s easy to create your own reverb impulses for OpenAIR. In this excerpt, the female background vocals, male harmony, and harmonica solo all used impulses I created using this technique. (The only ambience on the lead vocal was the Analog Delay.) Custom impulses are also used throughout Vortex and the subsequent song, What Really Matters (which also uses the Lead Guitar Hack for the solo).

I’m just getting started with my project for 2023, and it’s already generating some new tips that you’ll be seeing in the weeks ahead. I hope you find them helpful! Meanwhile, here’s the link to the complete Unconstrained project.

The Surprising Channel Strip EQ

Announcement: Version 1.4.1 of The Huge Book of Studio One Tips and Tricks is a free update to owners of previous versions. Simply download the book again from your PreSonus account, and it will be the most recent version. This is a “hotfix” update for improved compatibility with Adobe Acrobat’s navigation functions. The content is the same as version 1.4.

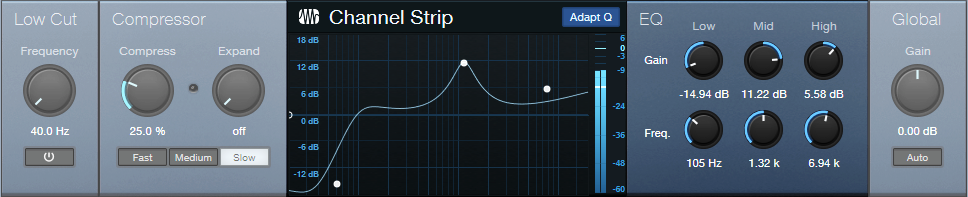

Studio One has a solid repertoire of EQs: the Fat Channel EQs, Pro EQ3, Ampire’s Graphic Equalizer, and the Autofilter. Even the Multiband Dynamics can serve as a hip graphic EQ. With this wealth of EQs, it’s easy to overlook the Channel Strip’s EQ section (fig. 1). Yet it’s significantly different from the other EQs.

Back to the 60s

In the late 60s, Saul Walker (API’s founder) introduced the concept of “proportional Q” in API equalizers. To this day, engineers praise API equalizers for their “musical” sound, and much of this relates to proportional Q.

The theory is simple. At lower gains, the bandwidth is wider. At higher gains, it becomes narrower. This is consistent with how we often use EQ. Lower gain settings are common for tone-shaping. Increasing the gain likely means you want a more focused effect.

The concept works similarly for cutting. If you’re applying a deep cut, you probably want to solve a problem. A broad cut is more about shaping tone. Also, because cutting mirrors the response of boosting, proportional Q equalizers make it easy to “undo” equalization settings. For example, if you boosted drums around 2 kHz by 6 dB, cutting by 3 dB produces the same curve as if the drums had originally been boosted by 3 dB.
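A toy model can make both ideas concrete: the bandwidth that narrows with gain, and the ability to "undo" a boost. The Python sketch below assumes the response in dB is simply a fixed bell shape multiplied by the gain setting. That's a deliberate simplification for illustration, not how the Channel Strip or API hardware is implemented, and the Gaussian shape, the one-octave width, and all the function names are assumptions.

```python
import math


def bell_shape(freq_hz, center_hz, width_octaves=1.0):
    """Unit-height bell on a log-frequency axis (an assumed Gaussian shape)."""
    octaves = math.log2(freq_hz / center_hz)
    return math.exp(-0.5 * (octaves / width_octaves) ** 2)


def response_db(freq_hz, gain_db, center_hz=2000.0):
    """Toy proportional-Q response: the same shape, scaled by the gain in dB."""
    return gain_db * bell_shape(freq_hz, center_hz)


def bandwidth_octaves(gain_db, drop_db=3.0, width_octaves=1.0):
    """Width, in octaves, between the points that sit drop_db below the peak."""
    if abs(gain_db) <= drop_db:
        raise ValueError("gain must exceed drop_db for the bandwidth to be defined")
    ratio = 1.0 - drop_db / abs(gain_db)
    return 2.0 * width_octaves * math.sqrt(-2.0 * math.log(ratio))


# More gain gives a narrower band, measured 3 dB below the peak
print(bandwidth_octaves(6.0))
print(bandwidth_octaves(15.0))

# A 6 dB boost followed by a 3 dB cut lands on the 3 dB boost curve
freq = 1500.0
print(response_db(freq, 6.0) + response_db(freq, -3.0))
print(response_db(freq, 3.0))
```

In this model, the two statements are really the same property seen from different angles. Because the curve's shape never changes, scaling it up makes the region near the peak look narrower, and adding a cut is just scaling the same curve back down.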

Proportional Q also works well with vocals and automation. For less intense parts, add a little gain in the 2-4 kHz range. When the vocal needs to cut through, raise the gain to increase the resonance and articulation.

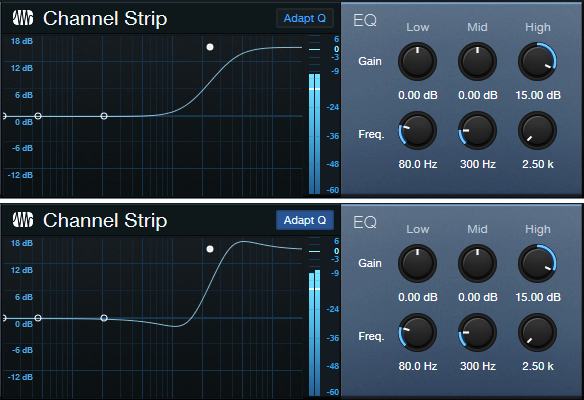

The Channel Strip’s Adapt Q button converts the response to proportional Q. Let’s look at how proportional Q affects the various responses.

High and Low Stages

The High band is a 12 dB/octave high shelf. In fig. 2, the Boost is +15 dB, at a frequency of 2.5 kHz. The top image shows the EQ without Adapt Q. The lower image engages Adapt Q, which increases the shelf’s resonance.

Fig. 3 shows what happens with 6 dB of gain. With Adapt Q enabled, the Q is actually less than the corresponding amount of Q without Adapt Q.

Cutting flips the curve vertically, but the shape is the same. With the Low shelf filter, the response is the mirror image of the High shelf.

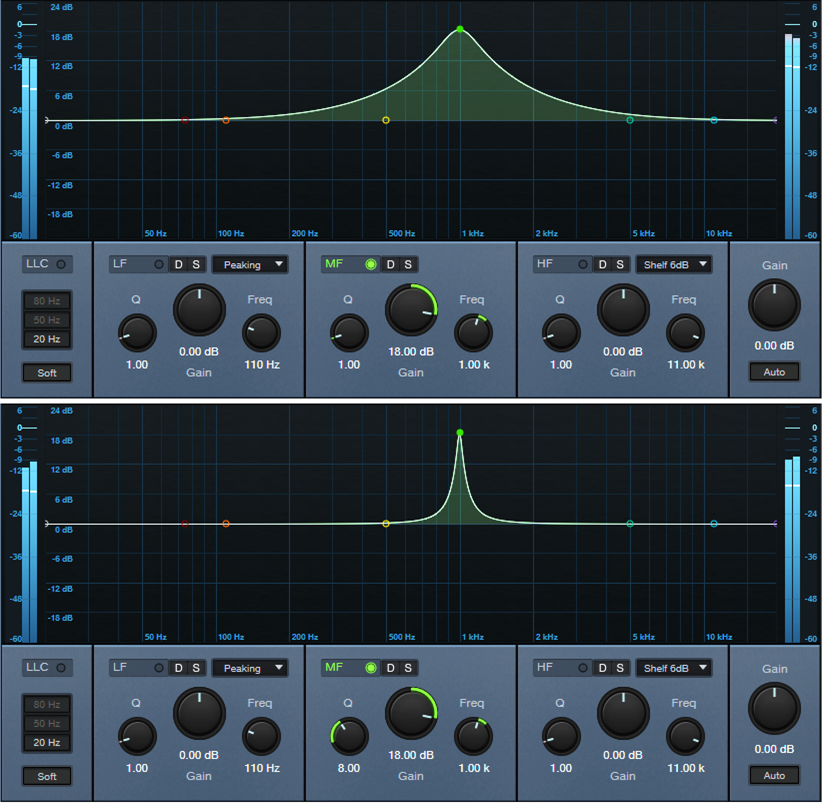

Midrange Stage

The Midrange EQ stage has variable Gain and Frequency. There’s no Q control, but the filter works with Adapt Q to increase Q with more gain or cut (fig. 4).

With 6 dB Gain, the Q is essentially the same, regardless of the Adapt Q setting (fig. 5).

Finally, another Adapt Q characteristic is that the midrange section’s slope down from either side of the peak (called the “skirt”) hits the minimum amount of gain at the same upper and lower frequencies, regardless of the gain. This is different from a traditional EQ like the Pro EQ3, where the skirt narrows with more Q (fig. 6).

Perhaps best of all, the Channel Strip draws very little CPU power. So, if you need more stages of EQ, go ahead and insert several Channel Strips in series, or in parallel using a Splitter or buses. And don’t forget—the Channel Strip also has dynamics 😊!