Author Archives: Craig Anderton

Into the Archives, Part 2

After last week’s thrilling cliff-hanger about how to preserve your WAV files for future generations, let’s look at how to export all your stereo audio tracks so that they incorporate effects processing, automation, level, and panning. There are several ways to do this. Although you can drag files into a Browser folder and choose Wave File with rendered Insert FX, Studio One’s Export Stems feature is much easier, and it also includes any processing added by effects in Bus and FX Channels. (We’ll also look at how to archive Instrument tracks.)

Saving as stems, where you choose individual Tracks or Channels, makes archiving processed files a breeze. For archiving, I choose Tracks because they’re what I’ll want to bring in for a remix. For example, if you’re using an instrument where multiple outputs feed into a stereo mix, Channels will save the mix, but Tracks will render the individual Instrument sounds into their own tracks.

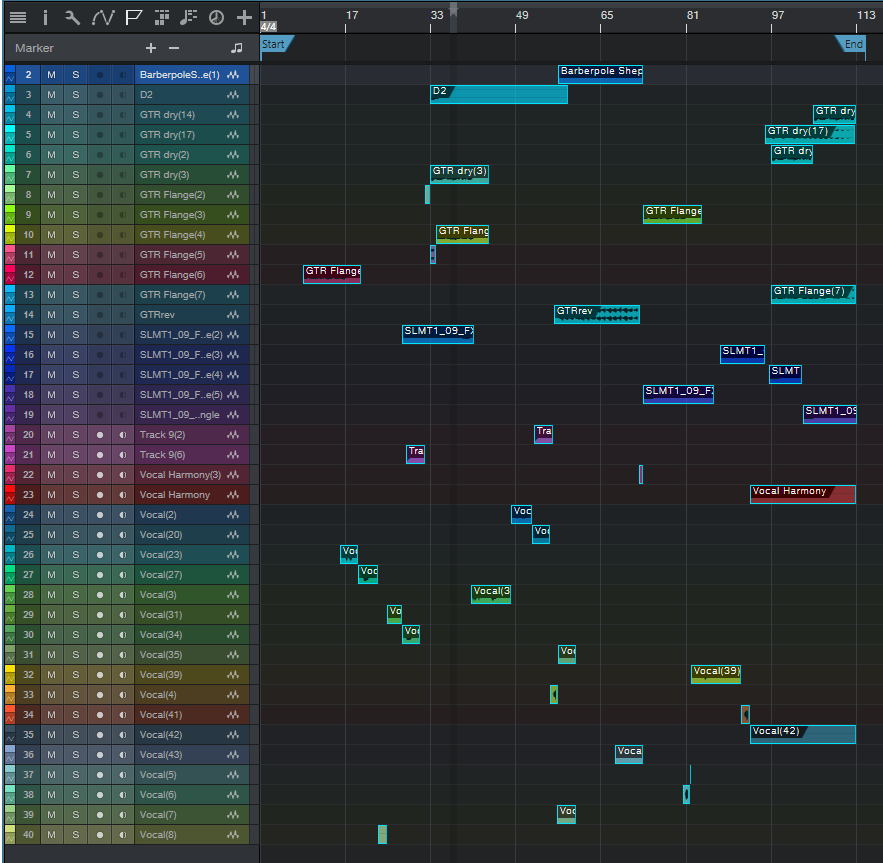

When you export everything as stems, and bring them back into an empty Song, playback will sound exactly like the Song whose stems you exported. However, note that saving as stems does not necessarily preserve the Song’s organization; for example, tracks inside a folder track are rendered as individual tracks, not as part of a folder. I find this preferable anyway. Also, if you just drag the tracks back into an empty song, they’ll be alphabetized by track name. If this is an issue, number each track in the desired order before exporting.

SAVING STEMS

Select Song > Export Stems. Choose whether you want to export what’s represented by Tracks in the Arrange view, or by Channels in the Console. Again, for archiving, I recommend Tracks (Fig. 1).

Figure 1: The Song > Export Stems option is your friend.

If there’s anything you don’t want to save, uncheck the box next to the track name. Muted tracks are unchecked by default, but if you check them, the tracks are exported properly, and open unmuted.

Note that if an audio track is being sent to effects in a Bus or FX Channel, the exported track will include any added effects. Basically, you’ll save whatever you would hear with Solo enabled. In the Arrange view, each track is soloed as it’s rendered, so you can monitor the archiving progress as it occurs.

In Part 1 on saving raw WAV files, we noted that different approaches required different amounts of storage space. Saving stems requires the most storage space, because it saves every track from start to end (or across whatever area of the timeline you select), even if a track has only a few seconds of audio in it. However, this also means the tracks are suitable for importing into programs that don’t recognize Broadcast WAV Files. When importing, start all tracks from the beginning of the song, or at least from the same start point, and they’ll all sync up properly.
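Because every stem spans the full export range, its size depends on the length of that range, not on how much audio the track actually contains. Here's a minimal Python sketch of the arithmetic for uncompressed PCM WAV files. It assumes 44.1 kHz, 24-bit, stereo stems (your export settings may differ) and ignores the small file-header overhead. The function names are illustrative, not part of Studio One.

```python
def pcm_wav_bytes(sample_rate_hz: int, bit_depth: int, channels: int, seconds: float) -> int:
    """Approximate size of uncompressed PCM audio data, excluding the WAV header."""
    bytes_per_sample = bit_depth // 8
    return int(sample_rate_hz * bytes_per_sample * channels * seconds)


def stem_set_bytes(num_tracks: int, range_seconds: float, sample_rate_hz: int = 44_100,
                   bit_depth: int = 24, channels: int = 2) -> int:
    """Every stem covers the whole export range, so each one costs the same amount of space."""
    return num_tracks * pcm_wav_bytes(sample_rate_hz, bit_depth, channels, range_seconds)


# 24 stems from a 4-minute song: about 1.5 GB, even if some tracks hold only a few seconds of audio.
print(stem_set_bytes(24, 4 * 60))
```

At these settings, each minute of stereo audio costs roughly 15.9 MB per stem, whether that minute contains music or silence.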

WHAT ABOUT THE MAIN FADER SETTINGS?

Note that the tracks will be affected by your Main fader inserts and processing, including any volume automation that creates a fadeout. I don’t use processors in the Main channel inserts, because I reserve any stereo 2-track processing for the Project page (hey, it’s Studio One—we have the technology!). I’d recommend bypassing any Main channel effects, because if you’re going to use archived files for a remix, you probably don’t want to be locked into any processing applied to the stereo mix. I also prefer to disable automation Read for volume levels, because the fade may need to last longer in a remix. Keep your options open.

However, the Main fader is useful if you try to save the stems and get an indication that clipping has occurred. Reduce the Main fader by slightly more than the amount of clipping (e.g., if the warning says a file was 1 dB over, lower the Main channel fader by 1.1 dB). Another option would be to isolate the track(s) causing the clipping and reduce their levels, but reducing the Main channel fader maintains the proportional levels of the mixed tracks.
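The trim is simple dB arithmetic, and because the Main fader applies the same multiplier to everything, the balance between tracks doesn't change. A minimal Python sketch: the 0.1 dB safety margin comes from the example above, while the function names and the two track levels are hypothetical.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a level change in dB to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def gain_to_db(gain: float) -> float:
    """Convert a linear amplitude multiplier to dB."""
    return 20 * math.log10(gain)


def main_fader_trim_db(over_db: float, margin_db: float = 0.1) -> float:
    """Main fader change: the reported overage plus a small safety margin (negative = lower)."""
    return -(over_db + margin_db)


trim = main_fader_trim_db(1.0)  # -1.1 dB for a 1 dB overage
new_peak_db = 1.0 + trim        # the loudest peak now sits just under full scale
# The same multiplier applies to every track, so their level ratios (the mix balance) stay put.
kick, vocal = 0.8, 0.4
g = db_to_gain(trim)
print(trim, round(new_peak_db, 2), round((kick * g) / (vocal * g), 6))
```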

SAVING INSTRUMENT AUDIO

Saving an Instrument track as a stem automatically renders it into audio. While that’s very convenient, you have other options.

When you drag an Instrument track’s Event to the Browser, you can save it as a Standard MIDI File (.mid) or as a Musicloop (press Shift to toggle between the two). Think of a Musicloop, a unique Studio One feature, as an Instrument track “channel strip”—when you bring it back into a project, it creates a Channel in the mixer, includes any Insert effects, zeroes the Channel fader, and incorporates the soft synth so you can edit it. Of course, if you’re collaborating with someone who doesn’t have the same soft synth or insert effects, they won’t be available (that’s another reason to stay in the Studio One ecosystem when collaborating if at all possible). But you’ll still have the note events in a track.

There are three cautions when exporting Instrument track Parts as Musicloops or MIDI files.

- The Instrument track Parts are exported as MIDI files, which aren’t (yet) time-stamped the way Broadcast WAV Files are. Therefore, when you bring a file back in, its first event starts at the Song’s beginning, regardless of where it originally occurred in the Song.

- Mutes aren’t recognized, so the file you bring back will include any muted notes.

- If there are multiple Instrument Parts in a track, you can drag them into the Browser and save them as a Musicloop. However, this will save a separate Musicloop for each Part. You can bring them all into the same track, one at a time, but then you have to place them properly. If you bring them all in at once, they’ll create as many Channels/Tracks as there are Instrument Parts, and all Parts will start at the Song’s beginning…not very friendly.

The bottom line: Before exporting an Instrument track as a Musicloop or MIDI file, I recommend deleting any muted Parts, selecting all the Instrument Parts and typing G to merge them into a single Part, then extending the Part’s start to the Song’s beginning (Fig. 2).

Figure 2: The bottom track shows the top track after it has been prepped to make it stem-export-friendly.

You can make sure that Instrument tracks import into the Song at the desired placement by using Transform to Audio Track. As mentioned above, it’s best to delete muted sections, and type G to merge multiple Parts into a single Part. However, you don’t need to extend the track’s beginning.

- Right-click in the track’s header, and select Transform to Audio Track.

- Drag the resulting audio file into the Browser. Now, the file is a standard Broadcast WAV Format file.

- When you drag the file into a Song, select it and choose Edit > Move to Origin to place it properly on the timeline.

However, unlike a Musicloop, this is only an audio file. When you bring it into a song, the resulting Channel does not include the soft synth, insert effects, etc.

Finally…it’s a good idea to save any presets used in your various virtual instruments into the same folder as your archived tracks. You never know…right?

And now you know how to archive your Songs. Next week, we’ll get back to Fun Stuff.

Safety First: Into the Archives, Part 1

I admit it. This is a truly boring topic.

You’re forgiven if you scoot down to something more interesting in this blog, but here’s the deal. I always archive finished projects, because remixing older projects can sometimes give them a second life—for example, I’ve stripped vocals from some songs, and remixed the instrument tracks for video backgrounds. Some have been remixed for other purposes. Some really ancient songs have been remixed because I know more than I did when I mixed them originally.

You can archive to hard drives, SSDs, the cloud…your choice. I prefer Blu-ray optical media, because it’s more robust than conventional DVDs, has a rated minimum shelf life that will outlive me (at which point my kid can use the discs as coasters), and can be stored in a bank’s safe deposit box.

Superficially, archiving may seem to be the same process as collaboration, because you’re exporting tracks. However, collaboration often occurs during the recording process, and may involve exporting stems—a single track that contains a submix of drums, background vocals, or whatever. Archiving occurs after a song is complete, finished, and mixed. This matters for dealing with details like Event FX and instruments with multiple outputs. By the time I’m doing a final mix, Event FX (and Melodyne pitch correction, which is treated like an Event FX) have been rendered into a file, because I want those edits to be permanent. When collaborating, you might not want to render these edits, in case your collaborator has different ideas of how a track should sound.

With multiple-output instruments, while recording I’m fine with having all the outputs appear over a single channel—but for the final mix, I want each output to be on its own channel for individual processing. Similarly, I want tracks in a Folder track to be exposed and archived individually, not submixed.

So, it’s important to consider why you want to archive, and what you will need in the future. My biggest problem when trying to open really old songs is that some plug-ins may no longer be functional, due to OS incompatibilities, not being installed, being replaced with an update that doesn’t load automatically in place of an older version, different preset formats, etc. Another problem may be some glitch or issue in the audio itself, at which point I need a raw, unprocessed file for fixing the issue before re-applying the processing.

Because I can’t predict exactly what I’ll need years into the future, I have three different archives.

- Save the Studio One Song using Save To a New Folder. This saves only what’s actually used in the Song, not the extraneous files accumulated during the recording process, so it will likely save quite a bit of storage space compared to the original recording. This will be all that many people need, and hopefully, when you open the Song in the future, everything will load and sound exactly as it did when it was finished. That means you won’t need to delve into the next two archive options.

- Save each track as a rendered audio WAV file with all the processing added by Studio One (effects, levels, and automation). I put these into a folder called Processed Tracks. Bringing them back into a Song sounds just like the original. They’re useful if the Song uses third-party plug-ins that, in the future, are no longer compatible or installed; you’ll still have the original track’s sound available.

- Save each track as a raw WAV file. These go into a folder named Raw Tracks. When remixing, you need raw tracks if different processing, fixes, or automation is required. You can also mix and match these with the rendered files—for example, maybe all the rendered virtual instruments are great, but you want different vocal processing.

Exporting Raw Wave Files

In this week’s tip, we’ll look at exporting raw WAV files. We’ll cover exporting files with processing (effects and automation), and exporting virtual instruments as audio, in next week’s tip.

Studio One’s audio files use the Broadcast Wave Format. This format time-stamps a file with its location on the timeline. When using any of the options we’ll describe, raw (unprocessed) audio files are saved with the following characteristics:

- No fader position or panning (files are pre-fader)

- No processing or automation

- Raw files incorporate Event envelopes (i.e., Event gain and fades) as well as any unrendered Event FX, including Melodyne

- Muted Events are saved as silence

Important: When you drag Broadcast WAV Files back into an empty Song, they won’t be aligned to their time stamp. You need to select them all, and choose Edit > Move to Origin.
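The time stamp lives inside the file itself. In the Broadcast Wave Format specification (EBU Tech 3285), the `bext` chunk carries a 64-bit TimeReference field, which is a sample count. The spec describes it as samples since midnight, and DAWs commonly use it as the file's timeline position. The Python sketch below reads that field using only the standard library. It assumes the value Studio One writes there is the position Move to Origin restores, which is a reasonable guess rather than documented behavior, and the function name is hypothetical.

```python
import struct


def read_bwf_position(data: bytes) -> float:
    """Return a Broadcast WAV file's time stamp in seconds.

    Walks the RIFF chunks, takes the sample rate from the 'fmt ' chunk, and reads
    the 64-bit TimeReference (a sample count) stored at byte 338 of the 'bext' chunk.
    """
    if data[0:4] != b"RIFF" or data[8:12] != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    sample_rate = None
    time_reference = None
    pos = 12
    while pos + 8 <= len(data):
        chunk_id = data[pos:pos + 4]
        (size,) = struct.unpack_from("<I", data, pos + 4)
        body = pos + 8
        if chunk_id == b"fmt ":
            # fmt body: format tag (2 bytes), channels (2), sample rate (4), ...
            (sample_rate,) = struct.unpack_from("<I", data, body + 4)
        elif chunk_id == b"bext":
            # Description 256 + Originator 32 + OriginatorReference 32
            # + OriginationDate 10 + OriginationTime 8 = 338 bytes before TimeReference.
            low, high = struct.unpack_from("<II", data, body + 338)
            time_reference = (high << 32) | low
        pos = body + size + (size & 1)  # RIFF chunks are padded to an even length
    if sample_rate is None or time_reference is None:
        raise ValueError("missing 'fmt ' or 'bext' chunk")
    return time_reference / sample_rate


# Usage, with a real file on disk:
# with open("Vocal.wav", "rb") as f:
#     print(read_bwf_position(f.read()))
```

A program that ignores the `bext` chunk simply places the audio at zero, which is why Option 3 below pads every file so that it starts at the Song's beginning.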

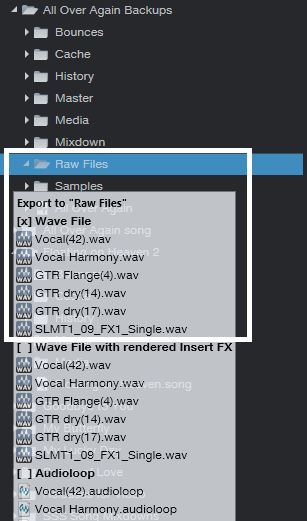

The easiest way to save files is by dragging them into a Browser folder. When the files hover over the Browser folder (Fig. 1), select one of three options—Wave File, Wave File with rendered Insert FX, or Audioloop—by cycling through the three options with the QWERTY keyboard’s Shift key. We’ll be archiving raw WAV files, so choose Wave File for the options we’re covering.

Figure 1: The three file options available when dragging to a folder in the Browser are Wave File, Wave File with rendered Insert FX, or Audioloop.

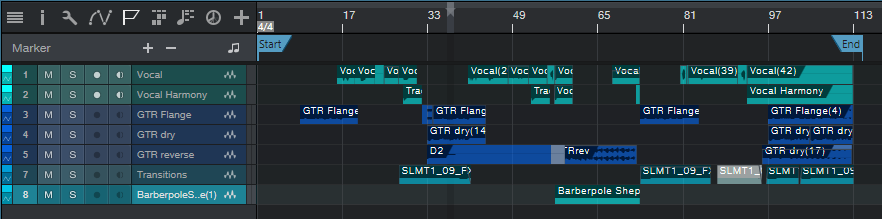

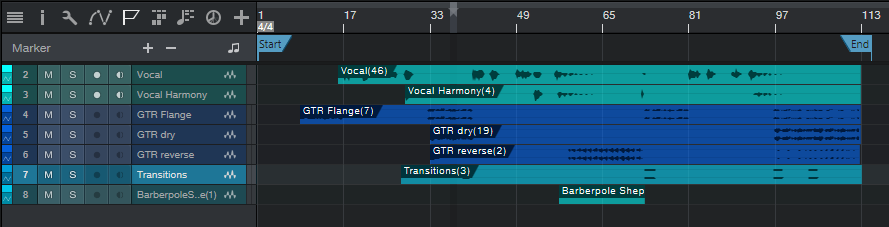

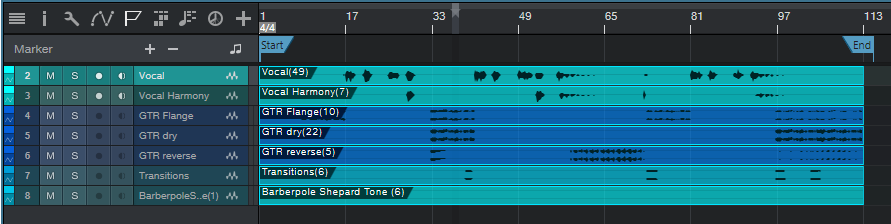

As an example, Fig. 2 shows the basic Song we’ll be archiving. Note that there are multiple Events, and they’re non-contiguous—they’ve been split, muted, etc.

Figure 2: This shows the Events in the Song being archived, for comparison with how they look when saving, or reloading into an empty Song.

Option 1: Fast to prepare, takes up the least storage space, but is a hassle to re-load into an empty Song.

Select all the audio Events in your Song, and then drag them into the Raw Tracks folder you created in the Browser (or whatever you named it). The files take up minimal storage space, because only the audio within each Event is saved, not the empty space between Events. However, I don’t recommend this option, because when you drag the stored Events back into a Song, each Event ends up on its own track (Fig. 3). So if a Song has 60 different Events, you’ll have 60 tracks. It takes time to move all the Events back into their original tracks, and then delete the empty tracks left over from moving so many Events.

Figure 3: These files have all been moved to their origin, so they line up properly on the timeline. However, exporting all audio Events as WAV files makes it time-consuming to reconstruct a Song, especially if the tracks were named ambiguously.

Option 2: Takes more time to prepare, takes up more storage space, but is much easier to load into an empty Song.

- Select the Events in one audio track, and type Ctrl+B to join them into a single Event in the track. If this causes clipping, reduce the Event gain by the amount that the level exceeds 0 dB. Repeat this for the other audio tracks.

- Joining each track’s Events creates an Event that starts at the first Event’s start and ends at the last Event’s end. This uses more storage space than Option 1, because if two Events are separated by several measures of empty space, joining them into a single Event saves the formerly empty space as track data (Fig. 4).

Figure 4: Before archiving, the Events in individual tracks have now been joined into a single track Event by selecting the track’s Events, and typing Ctrl+B.

- Select all the files, and drag them to your “Raw Tracks” folder with the Wave File option selected.

After dragging the files back into an empty Song, select all of them and choose Edit > Move to Origin. All the files will line up according to their time stamps, and look like they did in Fig. 4. Compare this to Fig. 3, where the individual, non-joined Events were exported.

Option 3: Universal, fast to prepare, but takes up the most storage space.

When collaborating with someone whose program can’t read Broadcast WAV Files, all imported audio files need to start at the beginning of the Song so that after importing, they’re synced on the timeline. For collaborations, it’s more likely you’ll export stems, as we’ll cover in Part 2, but sometimes the following file type is handy to have around.

- Make sure that at least one audio Event starts at the beginning of the song. If there isn’t one, use the Pencil tool to draw in a blank Event (of any length) that starts at the beginning of any track.

Figure 5: All tracks now consist of a single Event, which starts at the Song’s beginning.

- Select all the Events in all audio tracks, and type Ctrl+B. This joins all the Events within each track into a single Event, extends each track’s beginning to the beginning of the first audio Event, and extends each track’s end to the end of the longest track (Fig. 5). Because the first Event is at the Song’s beginning, all tracks start at the Song’s beginning.

- Select all the Events, and drag them into the Browser’s Raw Tracks folder (again, using the Wave File option).

When you bring them back into an empty Song, they look like Fig. 5. Extending all audio tracks to the Song’s beginning and end is why they take up more storage space than the previous options. Note that you will probably need to include the tempo when exchanging files with someone using a different program.

To give a rough idea of the storage differences among the three options, here are the results for a typical song.

Option 1: 302 MB

Option 2: 407 MB

Option 3: 656 MB
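The ranking follows from how much of the timeline each option writes to disk. Here's a Python sketch that totals the seconds of audio saved under each option, given hypothetical (start, end) Event times per track. It assumes Events within a track don't overlap, that an Event starts at the Song's beginning (as Option 3's first step ensures), and it ignores file headers.

```python
def archived_seconds(tracks: dict[str, list[tuple[float, float]]]) -> dict[str, float]:
    """Seconds of audio written by each archiving option.

    tracks maps a track name to its Events as (start, end) times in seconds.
    """
    # Option 1: each Event is exported on its own, so only the Events' own audio is saved.
    option1 = sum(end - start for events in tracks.values() for start, end in events)
    # Option 2: each track is joined from its first Event's start to its last Event's end,
    # so gaps between Events become saved silence.
    option2 = sum(max(end for _, end in events) - min(start for start, _ in events)
                  for events in tracks.values())
    # Option 3: every track runs from the Song's start (0) to the end of the longest track.
    song_end = max(end for events in tracks.values() for _, end in events)
    option3 = song_end * len(tracks)
    return {"Option 1": option1, "Option 2": option2, "Option 3": option3}


song = {
    "Vocal": [(10.0, 20.0), (40.0, 50.0)],
    "Guitar": [(0.0, 30.0)],
    "Bass": [(5.0, 55.0)],
}
print(archived_seconds(song))  # {'Option 1': 100.0, 'Option 2': 120.0, 'Option 3': 165.0}
```

Multiply each total by the bytes per second for your sample rate, bit depth, and channel count to estimate file sizes. The real-song figures above will differ, because they depend on how sparse the tracks are.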

You’re not asleep yet? Cool!! In Part 2, we’ll take this further, and conclude the archiving process.


How to Obtain the Perfect Fadeout

For most song fadeouts, I prefer an S-shaped fade.

It’s also great with audio-for-video productions because I usually use an S-fade for the video, so matching that with an equivalent audio fade works well. Although the Project Page allows only logarithmic or exponential fades for the clips that represent mixed songs, there’s nonetheless an easy way to add S-shaped fades to the Project Page’s songs.

This technique involves adding an S-fade during the final stages of mixing in the Song Page, and then updating the mastering file so that the Project Page version incorporates the fade.

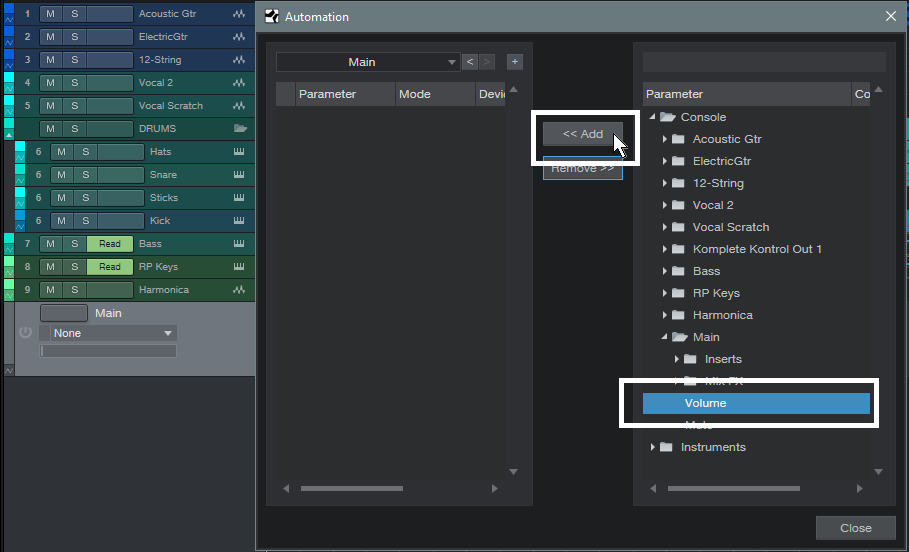

- Add an Automation track.

- Assign it to the Main Volume (Fig. 1). If there’s already automation for the Main volume, no problem; you can still add the S-fade, as described next.

Figure 1: Create an automation track, and assign it to the Console’s Main Volume parameter.

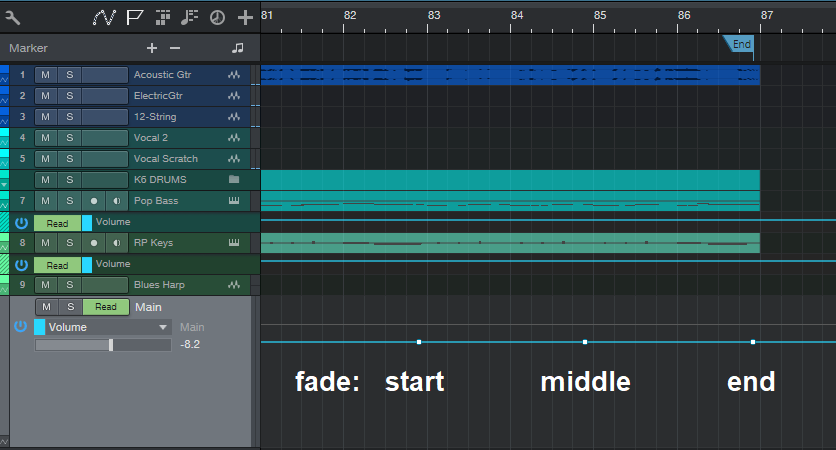

- Add nodes on the automation envelope where you want the fade to start and where you want it to end, as well as a node exactly in the middle of those two nodes (Fig. 2).

Figure 2: Add nodes at the fade’s intended start, middle, and end.

- Drag the end node all the way to the minimum level, which is the end of the fadeout.

- Drag the middle node to halfway between the start and end node levels.

- Drag the line between the start and middle nodes upward, to create a logarithmic fade.

- Drag the line between the middle and end nodes downward, to create an exponential fade down to the fadeout’s minimum level (Fig. 3).

Figure 3: How to drag the nodes, and adjust their curves, for an S-shaped fade.

And there you have it—an S-shaped fadeout. What’s more, unlike programs with a fixed S-shape fade, you can alter the shape for the first and second curves. For example, maybe you want a fairly quick fade out at first but then extend the final fade.
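Studio One draws these curves itself, so the Python sketch below is only an illustrative model of the shape, not Studio One's curve math. It assumes a normalized envelope (1.0 at the fade's start level, 0.0 at its minimum), a middle node exactly halfway in both time and level, and simple power curves for the two bowed segments. The hypothetical `first_curve` and `second_curve` exponents stand in for how far you drag each line, and they can be set independently.

```python
def s_fade_level(t: float, first_curve: float = 2.0, second_curve: float = 2.0) -> float:
    """Normalized S-shaped fade level at t in [0, 1] (1.0 = start level, 0.0 = fully faded).

    Start node to middle node: bows upward, so the level stays high longer.
    Middle node to end node: bows downward, dropping quickly and then tapering off.
    A curve value of 1.0 gives a straight line; larger values increase the bow.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0 and 1")
    if t <= 0.5:
        u = t / 0.5  # position within the first segment, 0..1
        return 1.0 - 0.5 * u ** first_curve
    u = (t - 0.5) / 0.5  # position within the second segment, 0..1
    return 0.5 * (1.0 - u) ** second_curve


# Default S-curve versus a "quick fade at first, then a long tail" variation.
print([round(s_fade_level(i / 4), 3) for i in range(5)])
print([round(s_fade_level(i / 4, first_curve=1.0, second_curve=3.0), 3) for i in range(5)])
```

With equal exponents, the two segments meet at the middle node with the same slope, which is what makes the transition sound smooth.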

After creating the fade, now all you need to do is update your mastering file, and the song in the Project Page will incorporate your perfect, S-shaped fade.

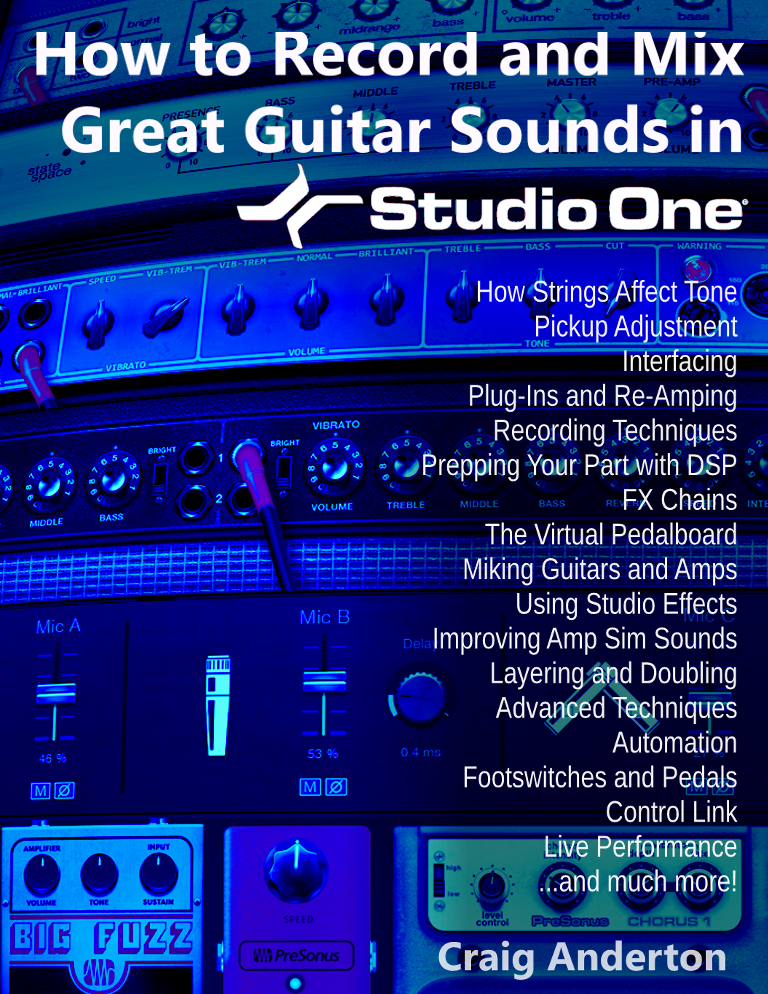

Before signing off this week, I wanted to mention there’s a new Studio One eBook out—How to Record and Mix Great Guitar Sounds in Studio One. It’s 274 pages and covers everything from how strings and pickups affect tone to getting the most out of the latest Ampire version (and a whole lot more). You can preview the table of contents here.

Four VCA Channel Applications

A VCA Channel has a fader, but it doesn’t pass audio. Instead, the fader acts like a gain control for other channels, or groups of channels. In some ways, you can think of a VCA Channel as a “remote control” for other channels. If you assign a VCA to control a channel, you can adjust the channel gain without having to move the associated channel’s fader. The VCA Channel fader does it for you.

Inserting a VCA channel works the same way as inserting any other kind of channel or bus. (However, there’s a convenient shortcut for grouping, as described later.) To place a channel’s gain under VCA control, choose the VCA Channel from the drop-down menu just below a channel’s fader…and let’s get started with the applications.

APPLICATION #1: EASY AUTOMATION TRIM

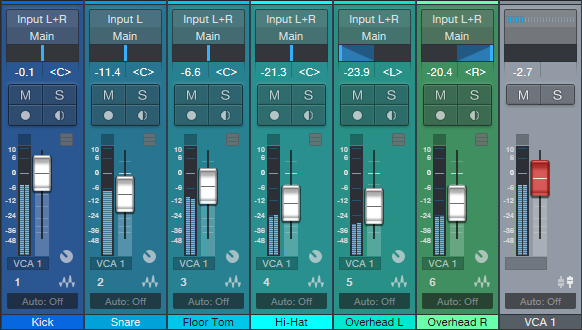

Sometimes when mixing, you’ll create detailed level automation where all the moves and changes are perfect. But as the mix develops, you may find you want to increase or decrease the overall level. There are several ways to do this, like inserting a Mixtool and adjusting the level independently of the automation, or using the automation’s Trim control. However, a VCA control is sometimes easier, and besides, it can control several channels at once if desired, without having to feed them to a bus. The VCA fader can even offset automation for multiple tracks that are located within a Folder Track (Fig. 1).

Figure 1: Note how the label below each fader says VCA 1. This means each channel’s gain is being controlled by the VCA 1 fader on the right. If all these tracks have their own automation, VCA 1 can bring the overall level up or down without altering the automation shape, and without needing to send all their outputs to the same bus.

If the automation changes are exactly as desired, but the overall level needs to increase or decrease, offset the gain by adjusting the VCA Channel’s fader. This can be simpler and faster than trying to raise or lower an entire automation curve using the automation Trim control. Furthermore, after making the appropriate adjustments, you can hide the VCA Channel to reduce mixer clutter, and show it only if future adjustments are necessary.

APPLICATION #2: NESTED GROUPING

One of the most common grouping applications involves drums—when you group a drum kit’s individual drums, once you get the right balance, you can bring their collective levels up or down without upsetting the balance. Studio One offers multiple ways to group channels. The traditional option is to feed all the outputs you want to group to a bus, and vary the level with the bus fader. For quick changes, a more modern option is to select the channels you want to group, so that moving one fader moves all the faders.

But VCAs take this further, because VCA groups can be nested. This means groups can be subsets of other groups.

A classic example of why this is useful involves orchestral scoring. The first violins could be assigned to VCA group 1st Violins, the second violins to VCA group 2nd Violins, violas to VCA group Violas, and cellos and double basses to VCA group Cellos+Basses.

You could assign the 1st Violins and 2nd Violins VCA groups to a Violins Group, and then assign the Violins group, Violas group, and Cellos+Basses group to a Strings group. Now you can vary the level of the first violins, the second violins, both violin sections (with the Violins Group), the violas, the cellos and double basses, and/or the entire string section (Fig. 2). This kind of nested grouping is also useful with choirs, percussion ensembles, drum machines with multiple outputs, background singers, multitracked drum libraries, and more.

Figure 2: The 1st Violins and 2nd Violins have their own groups, which are in turn controlled by the Violins group. Furthermore, the Violins, Violas, and Cellos+Basses groups are all controlled by the Strings group.
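One way to picture nested VCA groups is as a chain of gain offsets. The Python sketch below models each VCA as a dB offset that can itself be controlled by another VCA. It assumes, as VCA-style gain control is commonly modeled, that the offsets simply add in dB. This is an illustration of the concept, not a description of Studio One's internal code, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VcaFader:
    name: str
    offset_db: float = 0.0
    controlled_by: Optional["VcaFader"] = None  # a VCA can itself be assigned to another VCA

    def total_offset_db(self) -> float:
        """This VCA's offset plus the offsets of every VCA above it in the chain."""
        total, node = 0.0, self
        while node is not None:
            total += node.offset_db
            node = node.controlled_by
        return total


def channel_gain_db(fader_db: float, vca: Optional[VcaFader] = None) -> float:
    """A channel's effective gain: its own fader plus every controlling VCA's offset."""
    return fader_db + (vca.total_offset_db() if vca is not None else 0.0)


strings = VcaFader("Strings", -2.0)
violins = VcaFader("Violins", 1.0, controlled_by=strings)
first_violins = VcaFader("1st Violins", -1.0, controlled_by=violins)
violas = VcaFader("Violas", 0.0, controlled_by=strings)

print(channel_gain_db(-5.0, first_violins))  # -5 - 1 + 1 - 2 = -7.0
print(channel_gain_db(-4.0, violas))         # -4 + 0 - 2 = -6.0
```

Moving the Strings fader shifts every string channel by the same amount, while the section faders and each channel's own fader keep their relative settings.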

Although it may seem traditional grouping with buses would offer the same functionality, note that all the channel outputs would need to go through the same audio bus. Because VCA faders don’t pass audio, any audio output assignments for the channels controlled by the VCA remain independent. You’re “busing” gain, not audio outputs—that’s significant.

If you create a VCA group, all the faders within that group remain independent. With a conventional Studio One group, you can temporarily exclude a fader from the group to adjust it, but that’s not necessary with VCA grouping: you can independently move a fader that’s controlled by a VCA, and it will still be linked to the other members of the VCA group when you move the VCA fader.

Bottom line: The easiest way to work with large numbers of groups is with VCA faders.

APPLICATION #3: GROUPS AND SEND EFFECTS

A classic reason for using a VCA fader involves send effects. Suppose several channels (e.g., individual drums) go to a submix bus fader, and the channels also have post-fader Send controls going to an effect, such as reverb. With a conventional submix bus, as you pull down the bus fader, the faders for the individual tracks haven’t changed—so the post-fader send from those tracks is still sending a signal to the reverb bus. Even with the bus fader down all the way, you’ll still hear the reverb.

A VCA Channel solves this because it controls the gain of the individual channels. Less gain means less signal going into the channel’s Send control, regardless of the channel fader’s position. So with the VCA fader all the way down, there’s no signal going to the reverb (Fig. 3).

Figure 3: The VCA Channel controls the amount of post-fader Send going to a reverb, because the VCA fader affects the gain regardless of fader position. If the drum channels went to a conventional bus, reducing the bus volume would have no effect on the post-fader Sends.
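The difference comes down to where each control sits relative to the send tap. Here's a simplified Python model of a single drum channel with a post-fader reverb send. It assumes levels add in dB and uses negative infinity for a fader pulled all the way down. The function names and the -12 dB send level are hypothetical.

```python
NEG_INF = float("-inf")  # a fader pulled all the way down


def send_to_reverb_db(channel_fader_db: float, send_db: float, vca_db: float = 0.0) -> float:
    """Level a post-fader send delivers to the reverb.

    The VCA offset changes the channel's own gain, so it lands before the send tap.
    A submix bus fader is downstream of the channel, so it never appears here.
    """
    return channel_fader_db + vca_db + send_db


def dry_to_main_db(channel_fader_db: float, bus_fader_db: float = 0.0, vca_db: float = 0.0) -> float:
    """Level of the channel's dry signal after passing through a submix bus."""
    return channel_fader_db + vca_db + bus_fader_db


# Submix bus pulled down: the dry drums vanish, but the reverb send is untouched.
print(dry_to_main_db(0.0, bus_fader_db=NEG_INF), send_to_reverb_db(0.0, -12.0))
# VCA pulled down: the dry drums and the reverb send both go silent.
print(dry_to_main_db(0.0, vca_db=NEG_INF), send_to_reverb_db(0.0, -12.0, vca_db=NEG_INF))
```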

APPLICATION #4: BUS VS. VCA

There’s a fine point of using VCAs to control channel faders. Suppose individual drums feed a bus with a compressor or saturation effect. As you change the channel gain, the input to the compression or saturation changes, which alters the effect. If this is a problem, then you’re better off sending the channels to a standard bus. But this can also be an advantage, because pushing the levels could create a more intense sound by increasing the amount of compression or saturation. The VCA fader would determine the group’s “character,” while the bus control acts like a master volume control for the overall group level.

And because a VCA fader can control bus levels, some drums could go to an audio bus with a compressor, and some drums to a bus without compression. Then you could use the VCA fader to control the levels of both buses. This allows for tricks like raising the level of the drums and compressing the hi-hats, cymbals, and toms more, while leaving the kick and snare uncompressed…or increasing saturation on the kick and snare, while leaving other drum sounds untouched.

Granted, VCA Channels may not be essential to all workflows. But if you know what they can do, a VCA Channel may be able to solve a problem that would otherwise require a complex workaround with any other option.

Tremolo: Why Be Normal?

Tremolo (not to be confused with vibrato, even though Fender amps label their tremolo effect “vibrato”) was big in the ’50s and ’60s, especially in surf music, so it has a pretty stereotyped sound. But why be normal? Studio One’s X-Trem goes beyond what antique tremolos did, so this week’s Friday Tip delves into the cool rhythmic effects that X-Trem can create.

TREMOLOS IN SERIES

The biggest improvement in today’s tremolos is the sync-to-tempo function. One of my favorite techniques for EDM-type music is to insert two tremolos in series (Fig. 1).

Figure 1: These effects provide the sound in Audio Example 1. Note the automation track, which is varying the first X-Trem’s Depth parameter.

The first X-Trem runs at a fast rate, like 1/16th notes. Square wave modulation works well for this if you want a “chopped” sound, but I usually prefer sine waves, because they give a smoother, more pulsing effect. The second X-Trem runs at a slower rate. For example, if it syncs to half-notes, X-Trem lets through a string of pulses for a half-note, then attenuates the pulses for the next half-note. Using a sine wave for the second tremolo gives a rhythmic, pulsing sound that’s effective on big synth chords—check out the audio example.
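Two tremolos in series multiply their gains: the second one scales whatever the first one lets through. The Python sketch below models this with sine LFOs. It assumes one LFO cycle per chosen note value, 4/4 time with the quarter note as the beat, and a simple linear depth law. X-Trem's own sync convention, waveform phase, and depth curve may differ, and all names here are hypothetical.

```python
import math


def synced_rate_hz(bpm: float, note_value: float) -> float:
    """LFO rate when one cycle lasts one note value (1/16 = sixteenth, 1/2 = half note).

    Assumes the quarter note gets the beat, so a whole note lasts four beats.
    """
    beats_per_cycle = note_value * 4
    return (bpm / 60.0) / beats_per_cycle


def sine_tremolo(t: float, rate_hz: float, depth: float) -> float:
    """Gain between 1 - depth and 1; starts at full level when t = 0."""
    lfo = 0.5 - 0.5 * math.cos(2 * math.pi * rate_hz * t)  # sweeps 0 -> 1 -> 0
    return 1.0 - depth * lfo


def series_tremolo(t: float, bpm: float = 120.0, fast: float = 1 / 16, slow: float = 1 / 2,
                   fast_depth: float = 1.0, slow_depth: float = 1.0) -> float:
    """Two tremolos in series: the slow one scales the pulses the fast one lets through."""
    return (sine_tremolo(t, synced_rate_hz(bpm, fast), fast_depth)
            * sine_tremolo(t, synced_rate_hz(bpm, slow), slow_depth))


print(synced_rate_hz(120, 1 / 16), synced_rate_hz(120, 1 / 2))  # 8.0 1.0
```

Automating `fast_depth` over time corresponds to the Depth automation shown in Figure 1.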

BUILD YOUR OWN WAVEFORM

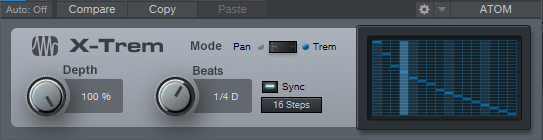

X-Trem’s waveforms are the usual suspects: Triangle, Sine, Upward Sawtooth, and Square. But what if you want a downward sawtooth, a more exponential wave (Fig. 2), or an entirely off-the-wall waveform?

Figure 2: Let’s have a big cheer for X-Trem’s 16 Steps option.

This is where the 16 Steps option becomes the star (Fig. 2) because you can draw pretty much any waveform you want. It’s a particularly effective technique with longer notes because you can hear the changes distinctly.
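Conceptually, a 16-step waveform is a lookup table: the cycle is split into 16 equal slots, and each slot holds a level. The Python sketch below shows that idea and builds two shapes the built-in waveforms don't offer. It assumes a cycle length of one 4/4 bar (the `beats_per_cycle` parameter is hypothetical; X-Trem's rate setting determines the real cycle) and levels from 0 to 1.

```python
def step_level(t_beats: float, steps: list[float], beats_per_cycle: float = 4.0) -> float:
    """Level of a step pattern at a position in beats.

    The cycle is split into len(steps) equal slots; each slot holds its step's level.
    """
    position = (t_beats % beats_per_cycle) / beats_per_cycle  # 0 <= position < 1
    index = min(int(position * len(steps)), len(steps) - 1)   # guard against rounding to len
    return steps[index]


# Shapes X-Trem's built-in waveforms don't offer, drawn as 16 steps:
DOWN_SAW = [1 - i / 16 for i in range(16)]          # full level, stepping down each slot
EXPONENTIAL_DECAY = [0.75 ** i for i in range(16)]  # falls fast, then tapers off

print([round(step_level(b, DOWN_SAW), 4) for b in (0.0, 1.0, 2.0, 3.0, 4.0)])
```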

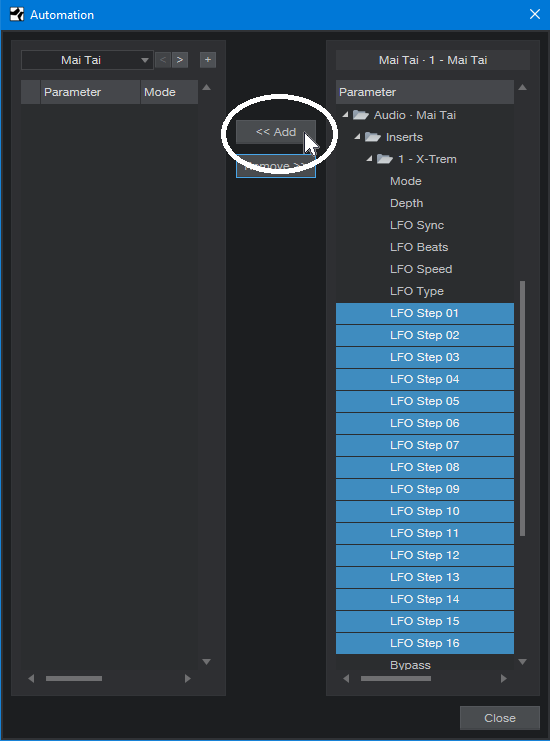

But for me, the coolest part is X-Trem’s “Etch-a-Sketch” mode, because you can automate each step individually, choose X-Trem’s Automation Write, and go crazy. Just unfold X-Trem’s automation options, choose all the steps, add them to the track’s automation, and draw away (Fig. 3).

Figure 3: Drawing automated step changes in real-time takes X-Trem beyond “why be normal” into something that may be illegal in some states.

Of course, if you just draw kind of randomly, then really, all you’re doing is level automation. Where this option really comes into its own is when you have a basic waveform for one section, change a few steps in a different section and let that repeat, draw a different waveform for another section and let that repeat, and so on. Another application is trying out different waveforms as a song plays, and capturing the results as automation. If you particularly like a pattern, cut and paste the automation to use it repetitively.

And just think, we haven’t even gotten into X-Trem’s panning mode—as with its overachieving tremolo functions, the panning can do a lot more than just audio ping-pong effects. Hmm…seems like another Friday Tip might be in order.

The “Double-Decker” Pre-Main Bus

This Friday tip has multiple applications—consider the following scenarios.

You like to mix with mastering processors in the Main bus to approximate the eventual mastered sound, but ultimately, you want to add (or update) an unprocessed file for serious mastering in the Project page. However, reality checks are tough. When you disable the master bus processors so you can hear the unprocessed sound you’ll be exporting, the level will usually change. So then you have to re-balance the levels, but you might not get them quite to where they were. And unfortunately, one of the biggest enemies of consistent mixing and mastering is varying monitoring levels. (Shameless plug alert: my book How to Create Compelling Mixes in Studio One, which is also available in Spanish, tells how to obtain consistent levels when mixing.)

Or, suppose you want to use the Tricomp or a similar “maximizing” program in the master bus. Although these can make a mix “pop,” there may be an unfair advantage if they make the music louder—after all, our brains tend to think that “louder is better.” The only way to get a realistic idea of how much difference the processor really makes is if you balance the processed and unprocessed levels so they’re the same.

Or, maybe you use the cool Sonarworks program to flatten your headphones’ or speakers’ response, so your mixes translate better to other systems. But Sonarworks should be enabled only when monitoring; you don’t want to export a file with a correction curve applied. Bypassing the Sonarworks plug-in when updating the Project page, or exporting a master file, is essential. But in the heat of the creative moment, you might forget to do that, and then need to re-export.

THE “DOUBLE-DECKER,” PRE-MAIN BUS SOLUTION

The Pre-Main bus essentially doubles up the Main bus, to create an alternate destination for all your channels. The Pre-Main bus, whose output feeds the Main bus, serves as a “sandbox” for the Main bus. You can insert whatever processors you want into the Pre-Main bus for monitoring, without affecting what’s ultimately exported from the Main bus.

Here’s how it works.

- Create a bus, and call it the Pre-Main bus.

- In the Pre-Main bus’s output field just above the pan slider, assign the bus output to the Main bus. If you don’t see the output field, raise the channel’s height until the output field appears.

- Insert the Level Meter plug-in in the Main bus. We’ll use this for LUFS level comparisons (check out the blog post Easy Level Matching, or the section on LUFS in my mixing book, as to why this matters).

Figure 1: The Pre-Main bus, outlined in white, has the Tricomp and Sonarworks plug-ins inserted. Note that all the channels have their outputs assigned to the Pre-Main bus.

- Insert the mastering processors in the Pre-Main bus that you want to use while monitoring. Fig. 1 shows the Pre-Main bus with the Tricomp and Sonarworks plug-ins inserted.

- Select all your channels. An easy way to do this is to click on the first channel in the Channel List, then shift+click on the last channel in the list. Or, click on the channel to the immediate left of the Main channel, and then shift+click on the first mixer channel.

With all channels selected, changing the output field for one channel changes the output field for all channels. Assign the outputs to the Main bus, play some music, and look at the Level Meter to check the LUFS reading.

Now assign the channel outputs to the Pre-Main bus. Again, observe the Level Meter in the Main bus. Adjust the Pre-Main bus’s level for the best level match when switching the output fields between the Main and Pre-Main bus. By matching the levels, you can be sure you’re making a fair comparison between the processed audio (the Pre-Main bus) and the unprocessed audio that will be exported from the Main bus.
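A LUFS reading is a loudness level in which one LU equals one dB. So, assuming the Pre-Main bus fader comes after its inserts (as channel faders normally do), the fader change needed for a match is roughly the difference between the two Level Meter readings. The Python sketch below shows that arithmetic; the readings are made-up examples, and the function names are hypothetical, not anything in Studio One.

```python
def matching_gain_db(main_lufs: float, pre_main_lufs: float) -> float:
    """Fader change (dB) that moves the Pre-Main bus reading to the
    unprocessed Main-bus reading. 1 LU corresponds to 1 dB."""
    return main_lufs - pre_main_lufs


def db_to_amplitude(db: float) -> float:
    """Linear amplitude multiplier for a gain change in dB."""
    return 10 ** (db / 20)


# Made-up readings: -16.0 LUFS with channels feeding the Main bus,
# -12.5 LUFS with channels feeding the processed Pre-Main bus.
offset = matching_gain_db(-16.0, -12.5)
print(f"Pre-Main fader change: {offset:+.1f} dB "
      f"(amplitude x{db_to_amplitude(offset):.3f})")
```

In practice, you'd still nudge the fader and re-check the meter, because readings fluctuate with the program material; the arithmetic just tells you roughly where to land.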

The only caution is that when all your channels are selected, if you change a channel’s fader, the faders for all the channels will change. Sometimes, this is a good thing—if you experience “fader level creep” while mixing, instead of lowering the master fader, you can lower the channel levels. But you also need to be careful not to reflexively adjust a channel’s level, and end up adjusting all of them by mistake. Remember to click on the channel whose fader you want to adjust, before doing any editing.

Doubling up the Main bus can be really convenient when mixing. Check it out when you want to audition processors in the master bus, but also want to do a quick reality check against the unprocessed sound, to hear what difference those processors really make to the overall output.

Acknowledgement: Thanks to Steve Cook, who devised a similar technique to accommodate using Sonarworks in Cakewalk, for providing the inspiration for this post.

Melodic Percussion

This week’s tip shows how to augment percussion parts by making them melodic—courtesy of Harmonic Editing.

The basic idea is that setting a white or pink noise track to follow the chord track gives the noise a sense of pitch. A long track of noise isn’t very interesting on its own, but if we gate it with a percussion part, we’ve layered the percussion part’s rhythm with the tonality of the noise. Add a little dotted 8th-note echo, and it can sound pretty cool.

Step 1: Bring on the Noise

Noise needs to be recorded in a track to be affected by harmonic editing, so open up the mixer’s Input section, and insert a Tone Generator effect in tracks 1 and 2. Set the Tone Generator to Pink Noise, and trim the level so it’s not slamming up against 0 (Fig. 1).

Figure 1: We need noise in each channel to implement this technique.

Record-enable both tracks (set them to Mono channel mode), enable Input Monitor, and start recording noise into the tracks. The reason for recording into two tracks is that we want to end up with stereo noise, and for that, the left and right tracks can’t be identical.
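Why two separate takes? Two independent noise recordings are nearly uncorrelated, so panning them left and right gives a wide stereo image, while identical copies would sit in the center as mono. The Python sketch below measures this with a correlation coefficient. It uses white noise from Python's `random` module as a stand-in for the Tone Generator's pink noise, an assumption that doesn't change the point.

```python
import random


def noise(n: int, seed: int) -> list[float]:
    """White noise stand-in for one recorded take (seed = a different take)."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


def correlation(a: list[float], b: list[float]) -> float:
    """Pearson correlation: 1.0 = identical (mono), near 0 = wide stereo."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


left = noise(48000, seed=1)
right = noise(48000, seed=2)
print(round(correlation(left, left), 3))   # same take on both sides
print(round(correlation(left, right), 3))  # two independent takes
```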

Step 2: Make the Noise Stereo

Now that the noise is recorded, you can remove the Tone Generator effects from the track inputs. At the mixer, pan one channel of noise left, and one right. In each track’s Inspector, choose Universal Mode for Follow Chords, and Strings for Tune mode (Fig. 2).

Figure 2: How to set up the tracks for stereo noise. The crucial Inspector settings are outlined in yellow.

Set each track’s output to a Bus, and now we have stereo noise at the Bus output. Insert a Gate in the Bus, and any other effects you want to use (I insert a Pro EQ to trim the highs and lows somewhat, and a Beat Delay for a more EDM-like vibe—but use your imagination).

Step 3: Control the Gate’s Sidechain

Choose the percussion sound with which you want to control the Gate sidechain, insert a pre-fader send in the percussion track, assign the send to the Gate’s sidechain, and then adjust the Gate parameters so that the percussion track modulates the noise percussively. Fig. 3 shows the track setup.

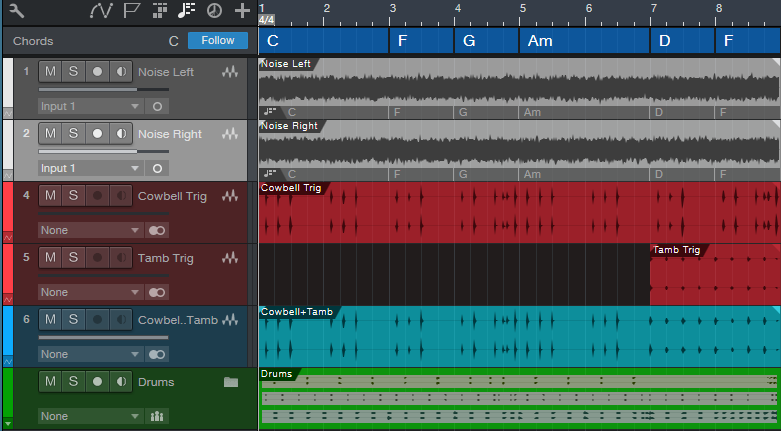

Figure 3: Track layout used in the audio example.

Tracks 1 and 2 are the mono noise tracks that follow the Chord Track, and feed the Bus. Tracks 4 and 5 both have pre-fader sends to control the Gate, so that for the first 7 measures only the cowbell controls the Gate, but at measure 8, a tambourine part also modulates the Gate.

Track 6 has the cowbell and tambourine audio, which is mixed in with the pitched noise, while the folder track has the kick, snare, and hi-hat loops. (The reason for not using post-fader sends on the percussion tracks is so that the tracks controlling the Gate are independent of the audio, which you might want to process differently.)
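Conceptually, the Gate follows the level of the percussion send, and passes the pitched noise only while that level is above the threshold. The Python sketch below is a deliberately simplified model of that sidechain gating: a peak-following envelope with exponential release driving a hard on/off gate, with no attack, hold, or range controls. It is not how Studio One's Gate is implemented, and the cowbell pattern is hypothetical.

```python
import random


def envelope(signal: list[float], release: float = 0.999) -> list[float]:
    """Peak follower: jumps to each new peak, then decays exponentially."""
    env, out = 0.0, []
    for x in signal:
        env = max(abs(x), env * release)
        out.append(env)
    return out


def sidechain_gate(audio, key, threshold=0.1, release=0.999):
    """Pass `audio` only while the key signal's envelope is >= threshold."""
    return [a if e >= threshold else 0.0
            for a, e in zip(audio, envelope(key, release))]


rng = random.Random(0)
pitched_noise = [rng.uniform(-0.5, 0.5) for _ in range(4000)]
# Hypothetical cowbell part: a full-scale hit every 1000 samples, silence between
cowbell = [1.0 if i % 1000 == 0 else 0.0 for i in range(4000)]
gated = sidechain_gate(pitched_noise, cowbell, threshold=0.1, release=0.995)
```

With these numbers, the gate stays open for roughly the first 460 samples after each hit, then closes until the next one. Raising `release` toward 1.0 keeps it open longer, which is the model's version of a longer gate.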

But Wait…There’s More!

With a longer gate, the sound is almost like the rave organ sound that was so big at the turn of the century. And there are options other than gating, like using X-Trem…or following the Gate with X-Trem. Or draw a periodic level automation waveform for the bus, and use the Transform function to make everything even weirder. In any case, now you have a new and unusual tool for percussive accents.

Keyboard Meets Power Chords

Hey keyboard players!

Do you feel kind of left out because of the cool guitar amps that Studio One added in version 4.6? Well, this week’s tip is all about having fun, and bringing power chord mentality to keyboard, courtesy of those State Space amps. Listen to the audio example, and you’ll hear what I’m talking about.

And to get you started having fun right away, you don’t even have to learn what’s going on to get the sound you just heard. Download Power Chordz.instrument, drag it into the track column, feed it from your favorite MIDI keyboard, and start playing.

Now, I know some of you prefer just to download a preset and get on with your life, and that’s fine—but for those who want some reverse engineering, here’s what’s under the hood.

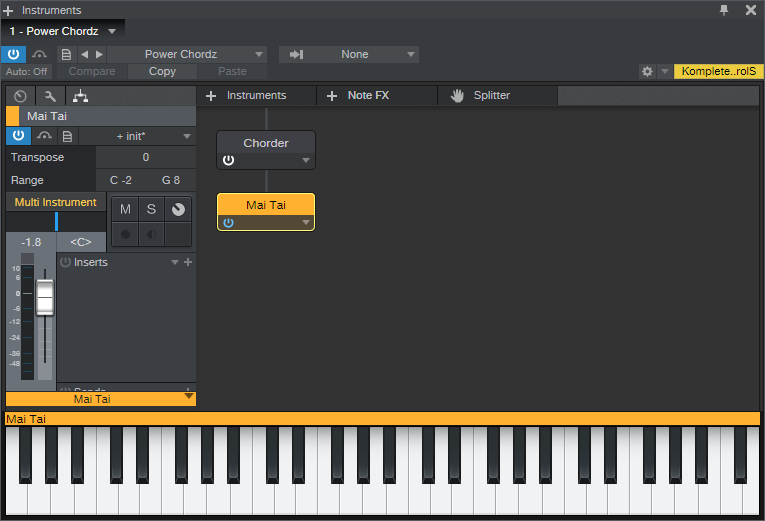

Figure 1: The Multi-Instrument is pretty basic—it just bundles a Chorder Note FX and Mai Tai together.

The preset starts with a Multi-Instrument (Fig. 1) that consists of the Chorder Note FX and the Mai Tai synthesizer. When you hit a keyboard key, the Chorder plays the tonic, plus notes a fifth above, an octave above, an octave plus a fifth above, and two octaves above: your basic “it’s not major, and it’s not minor” type of power chord.
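In MIDI terms, that voicing is a fixed set of semitone offsets from the key you play: 0, 7, 12, 19, and 24. The Python sketch below builds the chord from a root note number. It models the interval stack only, not the Chorder's actual settings, and the note-name helper assumes MIDI note 60 is called C4 (hosts differ on octave numbering).

```python
# Semitone offsets: root, fifth, octave, octave + fifth, two octaves
POWER_CHORD = (0, 7, 12, 19, 24)
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def power_chord(root: int) -> list[int]:
    """MIDI notes triggered by one key, dropping any above note 127."""
    return [root + step for step in POWER_CHORD if root + step <= 127]


def note_name(note: int) -> str:
    """Name a MIDI note, assuming the 60 = C4 convention."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"


chord = power_chord(40)  # low E
print(chord, [note_name(n) for n in chord])
```

Because every offset is either a fifth or an octave, there's no third in the stack, which is exactly why it's neither major nor minor.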

The Mai Tai uses a super-simple variation on the Init preset. In Fig. 2, anything that’s not relevant is grayed out. Turn off Osc 2, Noise, LFO 1, and LFO 2. There’s no modulation other than pitch bend, and no FX. Envelope 2 and Envelope 3 aren’t used. I set Pitch Bend to 7 semitones to do whammy bar effects, but adjust to taste. Also, you might want to play around with the Quality parameter. I’m allergic to anything called “normal,” so if you are as well, try the 80s, High, and Supreme settings to see if you like one of those better.

Figure 2: The Mai Tai preset uses simple waveforms, which is what you want when feeding amp sims and other distortion-oriented plug-ins.
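With Pitch Bend set to 7 semitones, the wheel's full travel spans a perfect fifth up or down. The Python sketch below maps a standard 14-bit MIDI pitch-bend value (0 to 16383, centered at 8192) to semitones, and then to an equal-tempered frequency ratio. Scaling the upper half separately so that full travel reaches exactly +7 is an assumption about how a synth might map the wheel, not a description of the Mai Tai's internals.

```python
CENTER = 8192  # 14-bit pitch-bend value with the wheel at rest
MAX_VALUE = 16383


def bend_semitones(value: int, bend_range: float = 7.0) -> float:
    """Map a 14-bit pitch-wheel value to a semitone offset."""
    if value >= CENTER:
        return (value - CENTER) / (MAX_VALUE - CENTER) * bend_range
    return (value - CENTER) / CENTER * bend_range


def frequency_ratio(semitones: float) -> float:
    """Equal-tempered frequency multiplier for a semitone offset."""
    return 2 ** (semitones / 12)


print(round(frequency_ratio(bend_semitones(MAX_VALUE)), 3))  # wheel fully up
```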

Look in the instrument’s mixer channel, and you’ll see four Insert effects: Pro EQ, Ampire, Open Air, and Binaural Pan. You can check out their settings by opening them up, but the Ampire settings (Fig. 3) deserve a bit of explanation.

Figure 3: Ampire is using the Dual Amplifier and 4×12 MFB speaker cabinet, but just about any amp and cab has its merits.

I chose the Dual Amplifier because it’s really three amps in one, as selected by the Channel knob on the right. I figured you’d appreciate having three separate sounds without having to do anything other than adjust one knob. Try different cabs and amps, but be forewarned: you can really go down an Endless Rabbit Hole of Tone, because there are a lot of great amp and cab sounds in there. I’ll admit that I ended up playing with various permutations and combinations of amps, effects, and cabs for hours.

You can also get creative with the Mai Tai, specifically the Character controls. I didn’t assign any controls to a Control Panel, or set up modulation, because having a pseudo-“whammy” bar pitch wheel was enough to keep me occupied. But please feel free to come up with your own variations. And of course…post your best stuff on the PreSonus Exchange!

Up Your Expressiveness with Upward Expansion

Many people don’t realize there are two types of expansion. Downward expansion is a popular choice for minimizing low-level noise like hiss and hum. It’s the opposite of a compressor: compression progressively reduces the output level above a certain threshold, while a downward expander progressively reduces the output level below a certain threshold. For example, with 2:1 compression, a 2 dB input level increase above the threshold yields a 1 dB increase at the output. With 1:2 expansion, a 1 dB input level decrease below the threshold yields 2 dB of attenuation at the output.
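The static (steady-state) curves behind those numbers are easy to write down in dB. The Python sketch below reproduces the two examples above; the -20 dB threshold is an arbitrary assumption, and real processors add attack, release, and often a soft knee on top of these curves.

```python
def compress(in_db: float, threshold_db: float, ratio: float) -> float:
    """Compression: above threshold, `ratio` dB of input change gives 1 dB
    of output change (ratio=2.0 is 2:1). Unity gain below threshold."""
    if in_db <= threshold_db:
        return in_db
    return threshold_db + (in_db - threshold_db) / ratio


def expand_down(in_db: float, threshold_db: float, ratio: float) -> float:
    """Downward expansion: below threshold, each 1 dB of input drop gives
    `ratio` dB of output drop (ratio=2.0 is 1:2). Unity gain above threshold."""
    if in_db >= threshold_db:
        return in_db
    return threshold_db - (threshold_db - in_db) * ratio


# 2:1 compression: 2 dB over a -20 dB threshold comes out 1 dB over.
print(compress(-18.0, -20.0, 2.0))     # -19.0
# 1:2 downward expansion: 1 dB under the threshold comes out 2 dB under.
print(expand_down(-21.0, -20.0, 2.0))  # -22.0
```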

Upward expansion doesn’t alter the signal’s linearity below the threshold—if the input changes by 2 dB, the output changes by 2 dB. But above the threshold, levels increase. For example, with 1:2 expansion, a 1 dB increase above the threshold becomes 2 dB of increase at the output. Fig. 1 shows the difference between downward and upward expansion.

Figure 1: The top screen shot shows how downward expansion attenuates levels below a threshold, while the lower one shows how upward expansion increases levels above a threshold.
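Upward expansion flips the slope above the threshold instead of below it. The Python sketch below is a static curve only (no attack, release, or Range control), again with an arbitrary -20 dB threshold and made-up levels for a drum hit and the room sound. It shows the gap between the two growing, which is why peaks stand out.

```python
def expand_up(in_db: float, threshold_db: float, ratio: float) -> float:
    """Upward expansion: unity gain below threshold; above it, each 1 dB of
    input rise gives `ratio` dB of output rise (ratio=2.0 is 1:2)."""
    if in_db <= threshold_db:
        return in_db
    return threshold_db + (in_db - threshold_db) * ratio


print(expand_up(-19.0, -20.0, 2.0))  # -18.0: 1 dB over becomes 2 dB over

peak, room = -16.0, -26.0  # hypothetical drum hit and room-sound levels (dB)
gap_in = peak - room
gap_out = expand_up(peak, -20.0, 2.0) - expand_up(room, -20.0, 2.0)
print(gap_in, gap_out)  # 10.0 14.0
```

Because the boosted peaks get louder, a real setup also has to keep them from clipping, which is one reason to keep the threshold just below the peaks of a well-normalized Event.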

That’s Nice…So What?

Upward expansion is a useful tool for drums, hand percussion, and other percussive instruments. One function is transient shaping, to emphasize attacks. Suppose you have a drum loop with too much room sound. Traditional expansion can make the room sound decay faster, but using upward expansion brings the peaks above the room sound, while leaving the characteristic room sound alone.

Another use is with percussion parts, like hand percussion, that are playing along with drums. A lot of times you don’t want the percussion hits to be too uniform in level, but instead, the most important hits should be a little louder compared to the rest of the part. Again, that’s where upward expansion shines. Dip the threshold just a tiny bit below the peaks—the peaks will stand out, and sound more dynamic.

Let’s listen to an audio example. The first two measures are a drum track with no upward expansion. The next two measures add a subtle amount of upward expansion. You’ll hear that the peaks from the kick and snare are still prominent, but the room sound and cymbals are a bit lower by comparison. The final two measures use the settings shown in Fig. 2. The kick and snare peaks are still there, but the rest of the part is more subdued, and the overall sound is “tighter,” with more dynamics.

Figure 2: Expander settings for the Upward Expansion Demo.

The only difference among the two-measure sections is the Range control setting. For the first two measures, it’s 0.00 dB; nothing can be above the threshold, because there is no threshold. In the second two measures, the Range is -2.00 dB, so anything above that threshold goes through 1:4 expansion. In the final two measures, the Range is -4.00 dB (I rarely take it lower, as long as the Event hits close to 0 on peaks).

Here’s the coolest part: Automating the Range parameter lets you alter a drum part’s dynamics and feel, without having to change the part itself. This is particularly wonderful for compressed drum loops, because you can lower the Range to keep the peaks, while making the rest of the loop less prominent. When you want a big sound, slam the Range back up to 0.00 dB.

But Wait! There’s More!

The Multiband Dynamics processor can do frequency-selective upward expansion. You can isolate just the high frequencies where a drum stick hits, and emphasize only that frequency range. Another use is making acoustic guitars sound more percussive, as in this audio example.

The first two measures are the original acoustic guitar track, and the next two use Multiband Dynamics to accent the strums (Fig. 3).

Figure 3: This setup takes advantage of the Multiband Dynamics’ ability to add upward expansion to a specific frequency range. Note the Input level control adding gain (outlined in white).

The Multiband Dynamics are in a separate, parallel track (you could build this into an FX Chain, but I think showing this in two channels illustrates the process better). Because the Multiband Dynamics is listening to only the high frequencies, which are quite weak and not sufficient to go over the expander threshold, the Input control is adding +10 dB of gain. Alternatively, you could insert a Mixtool before the Multiband Dynamics.
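Why the +10 dB of input gain matters comes down to simple arithmetic: gain shifts the whole band up, so peaks that sat below the expander threshold now cross it. The Python sketch below uses made-up levels (a high band peaking at -24 dB against a -20 dB threshold); only the +10 dB figure comes from the setup described here.

```python
def db_to_amplitude(db: float) -> float:
    """Linear amplitude multiplier for a gain in dB."""
    return 10 ** (db / 20)


def overshoot_db(peak_db: float, threshold_db: float,
                 input_gain_db: float = 0.0) -> float:
    """How far (dB) a band's peak lands above the expander threshold after
    input gain; zero or negative means the expander never engages."""
    return peak_db + input_gain_db - threshold_db


print(overshoot_db(-24.0, -20.0))        # -4.0: expander stays idle
print(overshoot_db(-24.0, -20.0, 10.0))  # 6.0: strum peaks now get expanded
print(round(db_to_amplitude(10.0), 3))   # 3.162: +10 dB as a linear factor
```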

This effect is best when used subtly, but next time you want to reach for a transient shaper, try this instead. It’s a flexible way to emphasize percussive hits and strums.

Taming the Wild Autofilter

I think the Autofilter is a great effect, which you probably already figured out if you saw my blog posts The Best Flanger Plug-In?, Attack that Autofilter, and Studio One’s Secret Equalizer. But the one effect that has always eluded me is the Autofilter effect itself, when used with guitar or bass. It never seemed to cover quite the right range: it wouldn’t go high enough if I hit the strings hard, but if I compensated for that by turning up the filter cutoff, then it wouldn’t go low enough. Furthermore, the responsiveness varied dramatically depending on whether I was playing high up on the neck or hitting low notes on the E and A strings. So basically, I’d never really used the Autofilter for its intended purpose, until now, because I’ve finally figured out the recipe. Hey, better late than never!

This technique involves dedicating two tracks to the same guitar audio—the Autofilter processes one of the tracks, while the other track provides a pre-fader send to the Autofilter’s sidechain (Fig. 1). By processing the send, we can make the Autofilter respond pretty much any way we want.

Figure 1: Both tracks are being fed from the guitar audio. The track on the right processes the audio to control the sidechain of an Autofilter, which is inserted in the track on the left.

The Autofilter (Fig. 2) has a lower filter cutoff than I would normally use without this technique; the envelope amount slider is up all the way (the LFO is at zero, so it doesn’t influence the envelope effect).

Figure 2: Initial Autofilter settings, when controlled by a processed sidechain signal.

As to what’s conditioning the send to make the Autofilter happy, it’s the underappreciated Channel Strip plug-in (Fig. 3). The strip is both compressing and expanding because, well, that’s what ended up sounding right. But the key here is also the EQ. Thanks to the massive high-frequency boost, the higher-output low strings are attenuated relative to the upper strings, so the filter’s response to the lower strings is consistent with its response to the upper strings. Meanwhile, the Gain is slammed all the way up, so that it drives the Autofilter to a suitably high frequency with strong input signals.

Figure 3: The Channel Strip is ideal for conditioning the signal controlling the Autofilter sidechain.
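One way to picture why conditioning the send works: the Autofilter's envelope raises the cutoff from its base setting by an amount that depends on the sidechain level, so gain and EQ on the send change how far each note pushes the filter. The Python sketch below uses a hypothetical exponential mapping (a 200 Hz base cutoff swept up to six octaves) and a made-up strum level; it illustrates the idea, and is not the Autofilter's actual response curve.

```python
def condition(level: float, gain_db: float) -> float:
    """Apply send gain to a 0..1 sidechain envelope level, clipped at 1.0."""
    return min(1.0, level * 10 ** (gain_db / 20))


def cutoff_hz(env: float, base_hz: float = 200.0, octaves: float = 6.0) -> float:
    """Hypothetical map: env 0 -> base_hz, env 1 -> base_hz * 2**octaves."""
    return base_hz * 2 ** (octaves * env)


# A strum whose raw envelope peaks at 0.1 (made-up value)
for gain in (0.0, 10.0, 20.0):
    env = condition(0.1, gain)
    print(f"send gain {gain:+.0f} dB -> cutoff {cutoff_hz(env):.0f} Hz")
```

In this model, EQ on the send works the same way as gain, just per frequency range: cutting the bass-heavy low strings keeps them from driving the filter further than the treble strings do.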

Here’s another tip: The technique of duplicating a track, and processing it to provide a custom sidechain signal, has a lot more uses than just this. Try using the X Trem as a step sequencer and control the sidechain in a compressor…or the Autofilter’s sidechain, for that matter.

Remember, if you want to come up with something novel, ask “what if?”—not so much “how to?” I guarantee you won’t find a single, click-bait YouTube video called “SECRET AUTOFILTER PRO TRICK YOU MUST KNOW!” I’d never claim this is a tip the pros use; the only reason I came up with it is because I was frustrated that I couldn’t get the Autofilter to do what I wanted, and thought “What if I process the signal going to sidechain?” I can’t help but wonder how many other “what ifs” are waiting to be discovered…well, see you next week!